
== NVIDIA Multi-Instance GPU ==

NVIDIA introduced MIG (Multi-Instance GPU) with the Ampere architecture in 2020.

When a single GPU runs more than one application, the applications compete for GPU resources. This is known as '''resource contention'''. With MIG, a GPU instance is set aside entirely for a specific application. MIG partitions a single GPU into multiple fully isolated virtual GPU devices. Each device can be sized for a particular use case, especially smaller use cases that need only a subset of the GPU's resources.

MIG gives each instance's processors separate and isolated paths through the entire memory system. The on-chip crossbar ports, L2 cache banks, memory controllers, and DRAM address buses are all assigned uniquely to an individual instance, which strengthens the isolation of GPU resources.


== MIG Configuration (profile) ==

Enabling or disabling MIG mode requires GPU persistence mode and a GPU reset. This is a one-time operation per GPU, and the setting persists across system reboots.

The number of slices a GPU can be partitioned into is not arbitrary. The [[NVIDIA driver]] APIs provide a set of “GPU Instance Profiles”, and users create GIs by specifying one of these profiles. 18 combinations are possible.


== Enable MIG Mode ==

When MIG is enabled on the GPU, the driver will attempt to reset the GPU so that MIG mode can take effect. Whether it does so depends on the GPU product.
<syntaxhighlight lang="bash">
$ sudo nvidia-smi -pm 1 # persistence mode is required to enable MIG
Enabled Legacy persistence mode for GPU 00000000:4F:00.0.
All done.

$ sudo nvidia-smi -mig 1 # enable MIG
Enabled MIG Mode for GPU 00000000:4F:00.0
All done.

# Verify that MIG mode is enabled
$ nvidia-smi --query-gpu=pci.bus_id,mig.mode.current --format=csv
pci.bus_id, mig.mode.current
00000000:4F:00.0, Enabled
</syntaxhighlight>
You may see a message such as “Warning: MIG mode is in pending enable state for GPU XXX:XX:XX.X:In use by another client …” when enabling MIG. If so, stop '''nvsm''' and '''dcgm''' and then try again.<ref>https://docs.nvidia.com/datacenter/tesla/mig-user-guide/</ref>
<syntaxhighlight lang="bash">
$ sudo systemctl stop nvsm
$ sudo systemctl stop dcgm
$ sudo nvidia-smi -i 0 -mig 1
</syntaxhighlight>
<nowiki>**</nowiki> See the [https://docs.nvidia.com/datacenter/tesla/mig-user-guide/ MIG user guide] for the post-processing steps required for a MIG configuration change to take effect.
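The verification step above can be scripted. The following is a minimal sketch. It assumes the CSV layout shown above: a header line followed by rows of the form bus-id, mode. The function name <code>mig_all_enabled</code> is hypothetical and not part of nvidia-smi. The function reports every GPU whose current MIG mode is not "Enabled" and returns a non-zero status if there is one.

```bash
#!/usr/bin/env bash
# Sketch: check the CSV printed by
#   nvidia-smi --query-gpu=pci.bus_id,mig.mode.current --format=csv
# mig_all_enabled is a hypothetical helper, not an nvidia-smi feature.

# Reads the CSV on stdin, prints every GPU whose current MIG mode is not
# "Enabled", and returns 1 if at least one such GPU was found.
mig_all_enabled() {
  awk -F', *' '
    NR == 1 { next }        # skip the header line
    NF < 2  { next }        # ignore blank or malformed lines
    $2 != "Enabled" { print "MIG not enabled on " $1 ": " $2; bad = 1 }
    END { exit bad ? 1 : 0 }
  '
}
```

On a MIG-capable host, pipe the query output into it: <code>nvidia-smi --query-gpu=pci.bus_id,mig.mode.current --format=csv | mig_all_enabled</code>. This is useful, for example, before creating GPU instances.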

== List GPU Instance Profiles ==

List the possible placements with the following command. It can also help to review the [https://cloud.redhat.com/blog/using-nvidia-a100s-multi-instance-gpu-to-run-multiple-workloads-in-parallel-on-a-single-gpu performance of different MIG instance sizes], measured with the [[MLPerf]] Single Shot MultiBox Detector (SSD) training [[benchmark]].

The placement syntax is {<index>}:<GPU Slice Count>. The braces list the indices at which an instance of that profile can start on the GPU. The number after the colon is the number of slices the instance occupies.
<syntaxhighlight lang="bash">
# H100 NVL, list possible placements
$ nvidia-smi mig -lgipp
GPU 0 Profile ID 19 Placements: {0,1,2,3,4,5,6}:1
GPU 0 Profile ID 20 Placements: {0,1,2,3,4,5,6}:1
GPU 0 Profile ID 15 Placements: {0,2,4,6}:2
GPU 0 Profile ID 14 Placements: {0,2,4}:2
GPU 0 Profile ID 9 Placements: {0,4}:4
GPU 0 Profile ID 5 Placement : {0}:4
GPU 0 Profile ID 0 Placement : {0}:8
</syntaxhighlight>
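The following sketch makes the placement syntax concrete. It expands each line of <code>nvidia-smi mig -lgipp</code> output into one line per possible placement, printed as profile ID, start index and slice count. It assumes the line format shown above: the profile ID follows the word "ID", and the placement specification is the last field. <code>expand_placements</code> is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: expand `nvidia-smi mig -lgipp` lines such as
#   GPU 0 Profile ID 15 Placements: {0,2,4,6}:2
# into one "profile-id start-index slice-count" line per placement.
# expand_placements is a hypothetical helper name.
expand_placements() {
  awk '
    /Profile ID/ {
      id = ""
      for (i = 1; i <= NF; i++) if ($i == "ID") id = $(i + 1)
      spec = $NF                              # e.g. {0,2,4,6}:2
      size = spec;   sub(/.*:/, "", size)     # part after the colon
      starts = spec; sub(/:.*/, "", starts)   # part before the colon
      gsub(/[{}]/, "", starts)                # drop the braces
      n = split(starts, s, ",")
      for (j = 1; j <= n; j++) print id, s[j], size
    }
  '
}
```

For profile 9 (3g.47gb), this yields start indices 0 and 4, each spanning 4 slices. That is consistent with the <code>4:4</code> placement that <code>nvidia-smi mig -lgi</code> reports for the 3g.47gb instance in the next section.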

== Creating GPU Instances ==

CUDA workloads cannot run on a MIG-enabled GPU until GPU instances, together with their compute instances, have been created.

Create GPU instances with the <samp>-cgi</samp> option. Adding the <samp>-C</samp> option also creates the corresponding compute instances (CIs).

<syntaxhighlight lang="bash">
# No GPU instances yet
$ nvidia-smi mig -lgi
No GPU instances found: Not Found

# Create one 3g.47gb instance (profile 9) and three 1g.12gb instances (profile 19).
# -cgi takes a comma-separated list of GPU instance profile IDs, one entry per
# GPU instance; the IDs come from the profile list (nvidia-smi mig -lgip).
# -C also creates a compute instance in each new GPU instance.
$ sudo nvidia-smi mig -cgi 9,19,19,19 -C
Successfully created GPU instance ID 2 on GPU 0 using profile MIG 3g.47gb (ID 9)
Successfully created compute instance ID 0 on GPU 0 GPU instance ID 2 using profile MIG 3g.47gb (ID 2)
Successfully created GPU instance ID 7 on GPU 0 using profile MIG 1g.12gb (ID 19)
Successfully created compute instance ID 0 on GPU 0 GPU instance ID 7 using profile MIG 1g.12gb (ID 0)
Successfully created GPU instance ID 8 on GPU 0 using profile MIG 1g.12gb (ID 19)
Successfully created compute instance ID 0 on GPU 0 GPU instance ID 8 using profile MIG 1g.12gb (ID 0)
Successfully created GPU instance ID 9 on GPU 0 using profile MIG 1g.12gb (ID 19)
Successfully created compute instance ID 0 on GPU 0 GPU instance ID 9 using profile MIG 1g.12gb (ID 0)

# List current MIG GPU instances
$ sudo nvidia-smi mig -lgi
+-------------------------------------------------------+
| GPU instances: |
| GPU Name Profile Instance Placement |
| ID ID Start:Size |
|=======================================================|
| 0 MIG 1g.12gb 19 7 0:1 |
+-------------------------------------------------------+
| 0 MIG 1g.12gb 19 8 1:1 |
+-------------------------------------------------------+
| 0 MIG 1g.12gb 19 9 2:1 |
+-------------------------------------------------------+
| 0 MIG 3g.47gb 9 2 4:4 |
+-------------------------------------------------------+
</syntaxhighlight>
List the MIG devices:
<syntaxhighlight lang="bash">

# Show GPU and MIG device UUIDs; Docker containers can select a device by its UUID
$ nvidia-smi -L
GPU 0: NVIDIA H100 NVL (UUID: GPU-d2481ba7-bc0a-5ff0-0d5b-b4ba1d4d56c5)
MIG 3g.47gb Device 0: (UUID: MIG-45174f60-85a9-51f4-bc61-f6dc57dc60e1)
MIG 1g.12gb Device 1: (UUID: MIG-b6bbb81c-71b7-54a2-a621-9b2a12215cab)
MIG 1g.12gb Device 2: (UUID: MIG-ea284e9f-1cfb-5980-8948-bc86b7c1ae71)
MIG 1g.12gb Device 3: (UUID: MIG-2bbae2fb-2ef8-5917-badf-b0571b1381cf)

# Show the physical GPU and its MIG devices
$ nvidia-smi
Sun May 25 22:19:13 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.04 Driver Version: 570.124.04 CUDA Version: 12.8 |
|-----------------------------------------+------------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+========================+======================|
| 0 NVIDIA H100 NVL On | 00000000:4F:00.0 Off | On |
| N/A 38C P0 58W / 400W | 87MiB / 95830MiB | N/A Default |
| | | Enabled |
+-----------------------------------------+------------------------+----------------------+
+-----------------------------------------------------------------------------------------+
| MIG devices: |
+------------------+----------------------------------+-----------+-----------------------+
| GPU GI CI MIG | Memory-Usage | Vol| Shared |
| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |
| | | ECC| |
|==================+==================================+===========+=======================|
| 0 2 0 0 | 44MiB / 47488MiB | 60 0 | 3 0 3 0 3 |
| | 0MiB / 65535MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| 0 7 0 1 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| 0 8 0 2 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| 0 9 0 3 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=========================================================================================|
| No running processes found |
+-----------------------------------------------------------------------------------------+
</syntaxhighlight>
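The <samp>-cgi</samp> argument is a comma-separated list of profile IDs, with one entry per GPU instance. So <code>9,19,19,19</code> means one instance of profile 9 and three of profile 19. The hypothetical helper <code>build_cgi_list</code> below builds that list from profile:count pairs. It is a sketch that does not check whether the combination fits on the GPU. Combinations that do not fit will only be reported when nvidia-smi tries to create the instances.

```bash
#!/usr/bin/env bash
# Sketch: build the profile-ID list for `nvidia-smi mig -cgi` from
# "profile-id:count" pairs. build_cgi_list is a hypothetical helper;
# it does not check whether the combination fits on the GPU.
build_cgi_list() {
  local out="" pair id count i
  for pair in "$@"; do
    if [[ "$pair" != *:* ]]; then
      echo "expected profile-id:count, got '$pair'" >&2
      return 1
    fi
    id="${pair%%:*}"
    count="${pair##*:}"
    for ((i = 0; i < count; i++)); do
      out+="${out:+,}${id}"     # add a comma before every entry but the first
    done
  done
  printf '%s\n' "$out"
}
```

<code>sudo nvidia-smi mig -cgi "$(build_cgi_list 9:1 19:3)" -C</code> expands to the same command used in the example above.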

== Use MIG device ==

<syntaxhighlight lang="bash">
# To use a specific MIG device, look up its device index or MIG UUID
$ nvidia-smi -L
GPU 0: NVIDIA H100 NVL (UUID: GPU-d2481ba7-bc0a-5ff0-0d5b-b4ba1d4d56c5)
MIG 3g.47gb Device 0: (UUID: MIG-45174f60-85a9-51f4-bc61-f6dc57dc60e1)
MIG 1g.12gb Device 1: (UUID: MIG-b6bbb81c-71b7-54a2-a621-9b2a12215cab)
MIG 1g.12gb Device 2: (UUID: MIG-ea284e9f-1cfb-5980-8948-bc86b7c1ae71)
MIG 1g.12gb Device 3: (UUID: MIG-2bbae2fb-2ef8-5917-badf-b0571b1381cf)

# GPU 0 has MIG devices 0 to 3, so each MIG device can also be addressed as
# <GPU index>:<MIG device index>, i.e. 0:0, 0:1, 0:2 and 0:3

# Run a container on MIG device 0:2, selected by its UUID
$ docker run --gpus '"device=MIG-ea284e9f-1cfb-5980-8948-bc86b7c1ae71"' --rm nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi
Sun May 25 22:56:04 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.04 Driver Version: 570.124.04 CUDA Version: 12.8 |
|-----------------------------------------+------------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+========================+======================|
| 0 NVIDIA H100 NVL On | 00000000:4F:00.0 Off | On |
| N/A 39C P0 59W / 400W | N/A | N/A Default |
| | | Enabled |
+-----------------------------------------+------------------------+----------------------+
+-----------------------------------------------------------------------------------------+
| MIG devices: |
+------------------+----------------------------------+-----------+-----------------------+
| GPU GI CI MIG | Memory-Usage | Vol| Shared |
| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |
| | | ECC| |
|==================+==================================+===========+=======================|
| 0 8 0 0 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=========================================================================================|
| No running processes found |
+-----------------------------------------------------------------------------------------+

# Alternatively, use the <GPU index>:<MIG device index> form instead of the UUID (here MIG device 0:1)
$ docker run --gpus '"device=0:1"' --rm nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi
Sun May 25 22:56:47 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.04 Driver Version: 570.124.04 CUDA Version: 12.8 |
|-----------------------------------------+------------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+========================+======================|
| 0 NVIDIA H100 NVL On | 00000000:4F:00.0 Off | On |
| N/A 39C P0 59W / 400W | N/A | N/A Default |
| | | Enabled |
+-----------------------------------------+------------------------+----------------------+
| MIG devices: |
+------------------+----------------------------------+-----------+-----------------------+
| GPU GI CI MIG | Memory-Usage | Vol| Shared |
| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |
| | | ECC| |
|==================+==================================+===========+=======================|
| 0 7 0 0 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=========================================================================================|
| No running processes found |
+-----------------------------------------------------------------------------------------+
</syntaxhighlight>
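Copying UUIDs by hand is error-prone when partitions change. The following is a minimal sketch of a lookup. It assumes the <code>nvidia-smi -L</code> output format shown above, where MIG UUIDs appear as <code>MIG-</code> followed by hexadecimal groups. <code>mig_uuid_for</code> is a hypothetical helper that maps the GPU:MIG-device form (for example <code>0:2</code>) to the matching UUID.

```bash
#!/usr/bin/env bash
# Sketch: resolve "gpu-index:mig-device-index" (e.g. 0:2) to a MIG UUID by
# scanning `nvidia-smi -L` output on stdin. mig_uuid_for is a hypothetical
# helper; it assumes UUIDs of the form MIG-<hex groups>, as shown above.
mig_uuid_for() {
  local want_gpu="${1%%:*}" want_dev="${1##*:}"
  awk -v g="$want_gpu" -v d="$want_dev" '
    /^GPU [0-9]+:/ { gpu = $2; sub(/:$/, "", gpu) }      # "GPU 0:" -> 0
    /^ *MIG .*Device +[0-9]+:/ {
      for (i = 1; i <= NF; i++)
        if ($i == "Device") { dev = $(i + 1); sub(/:$/, "", dev) }
      if (gpu == g && dev == d && match($0, /MIG-[0-9a-fA-F-]+/)) {
        print substr($0, RSTART, RLENGTH)
        found = 1
        exit
      }
    }
    END { exit found ? 0 : 1 }
  '
}
```

For example: <code>uuid=$(nvidia-smi -L | mig_uuid_for 0:2) && docker run --gpus "\"device=${uuid}\"" --rm nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi</code>. The function returns a non-zero status when no such MIG device exists, so the <code>&&</code> prevents the container from starting with an empty device.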

== Destroying GPU Instances ==

=== <kbd>Destroy all the CIs and GIs</kbd> ===
<syntaxhighlight lang="bash">
$ sudo nvidia-smi mig -dci && sudo nvidia-smi mig -dgi
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 7
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 8
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 9
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 2
Successfully destroyed GPU instance ID 7 from GPU 0
Successfully destroyed GPU instance ID 8 from GPU 0
Successfully destroyed GPU instance ID 9 from GPU 0
Successfully destroyed GPU instance ID 2 from GPU 0
</syntaxhighlight>
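The command above destroys compute instances before GPU instances. The sketch below wraps the same two commands in that order. It can also disable MIG mode afterwards with <code>nvidia-smi -mig 0</code>. The <code>NVIDIA_SMI</code> override variable and the <code>--disable</code> flag belong to this sketch only and are not nvidia-smi features. The override exists so the function can be dry-run against a stub.

```bash
#!/usr/bin/env bash
# Sketch: tear down all MIG partitions in the same order as the command
# above: compute instances first, then GPU instances. With --disable it
# also turns MIG mode off (nvidia-smi -mig 0). Run as root.
# NVIDIA_SMI is an assumption of this sketch: point it at a stub for a dry run.
mig_teardown() {
  local smi="${NVIDIA_SMI:-nvidia-smi}"
  "$smi" mig -dci || { echo "destroying compute instances failed" >&2; return 1; }
  "$smi" mig -dgi || { echo "destroying GPU instances failed" >&2; return 1; }
  if [[ "${1:-}" == "--disable" ]]; then
    "$smi" -mig 0 || { echo "disabling MIG mode failed" >&2; return 1; }
  fi
}
```

The steps are chained, as the <code>&&</code> in the original command is. If <code>-dci</code> fails for any reason, the function stops before attempting <code>-dgi</code>.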

=== Delete specific CIs created under GI 1 ===
<syntaxhighlight lang="bash">
$ sudo nvidia-smi mig -dci -ci 0,1,2 -gi 1
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 1
Successfully destroyed compute instance ID 1 from GPU 0 GPU instance ID 1
Successfully destroyed compute instance ID 2 from GPU 0 GPU instance ID 1
</syntaxhighlight>

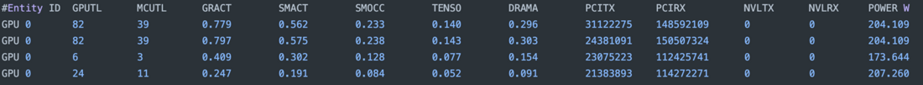

== <kbd>Monitoring MIG Devices</kbd> ==

nvidia-smi does not report utilization metrics for MIG devices. Utilization is displayed as N/A even while CUDA programs are running.

To monitor MIG devices, NVIDIA recommends NVIDIA DCGM v2.0.13 or later.

For more information:

* [https://servicedesk.surf.nl/wiki/spaces/WIKI/pages/92668151/dcgmi+dmon How to use DCGM]
* [https://docs.nvidia.com/datacenter/dcgm/latest/user-guide/feature-overview.html#profiling-metrics DCGM document]

<syntaxhighlight lang="bash">
# -e selects the DCGM field IDs to query, e.g. GPU activity (1001) and Tensor Core utilization (1004)
# The field IDs are defined in https://github.com/NVIDIA/DCGM/blob/master/dcgmlib/dcgm_fields.h
$ dcgmi dmon -e 203,204,1001,1002,1003,1004,1005,1009,1010,1011,1012,155 -i <GPU IDs>
</syntaxhighlight>

[[File:Dcgmi-gpu-utilization.png|center|frameless|923x923px|dcgmi-gpu-utilization]]
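The numeric IDs passed to <code>-e</code> are hard to read at a glance. This sketch maps the IDs used in the command above to symbolic names from <code>dcgm_fields.h</code>. The table is an assumption and should be checked against the header shipped with the installed DCGM version. <code>describe_dcgm_fields</code> is a hypothetical helper. The sketch needs bash 4.2 or later for global associative arrays.

```bash
#!/usr/bin/env bash
# Sketch (bash 4.2+): name the DCGM field IDs used in the dcgmi dmon command
# above. The ID-to-name table is an assumption based on dcgm_fields.h;
# verify it against the header of your DCGM version.
declare -gA DCGM_FIELD_NAMES=(
  [155]=DCGM_FI_DEV_POWER_USAGE
  [203]=DCGM_FI_DEV_GPU_UTIL
  [204]=DCGM_FI_DEV_MEM_COPY_UTIL
  [1001]=DCGM_FI_PROF_GR_ENGINE_ACTIVE
  [1002]=DCGM_FI_PROF_SM_ACTIVE
  [1003]=DCGM_FI_PROF_SM_OCCUPANCY
  [1004]=DCGM_FI_PROF_PIPE_TENSOR_ACTIVE
  [1005]=DCGM_FI_PROF_DRAM_ACTIVE
  [1009]=DCGM_FI_PROF_PCIE_TX_BYTES
  [1010]=DCGM_FI_PROF_PCIE_RX_BYTES
  [1011]=DCGM_FI_PROF_NVLINK_TX_BYTES
  [1012]=DCGM_FI_PROF_NVLINK_RX_BYTES
)

# Prints "id name" for each ID in a comma-separated -e list;
# IDs missing from the table are reported as UNKNOWN.
describe_dcgm_fields() {
  local -a ids
  local id
  IFS=',' read -ra ids <<< "$1"
  for id in "${ids[@]}"; do
    printf '%s %s\n' "$id" "${DCGM_FIELD_NAMES[$id]:-UNKNOWN}"
  done
}
```

For example, <code>describe_dcgm_fields 203,204,1001,1002,1003,1004,1005,1009,1010,1011,1012,155</code> annotates the full list used above.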

== MIG and Multi-Process Service<ref>https://docs.nvidia.com/deploy/mps/index.html</ref> ==

MPS ([https://docs.nvidia.com/deploy/mps/index.html Multi-Process Service]) and MIG can work together, potentially achieving even higher utilization for certain workloads.

=== MIG and MPS Workflow ===

* Configure the desired MIG geometry on the GPU.
* Set the CUDA_MPS_PIPE_DIRECTORY variable to point to unique directories. This lets the multiple MPS servers and their clients communicate with each other through named pipes and Unix domain sockets.
* Launch the application, specifying the MIG device with CUDA_VISIBLE_DEVICES.
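The workflow above can be sketched as one MPS control daemon per MIG device, each with its own pipe and log directory. <code>CUDA_VISIBLE_DEVICES</code>, <code>CUDA_MPS_PIPE_DIRECTORY</code>, <code>CUDA_MPS_LOG_DIRECTORY</code> and <code>nvidia-cuda-mps-control -d</code> are standard MPS interfaces. Three things are assumptions of this sketch: the directory layout under a base directory, the function name <code>start_mps_for_mig</code>, and the <code>MPS_CONTROL</code> override used for dry runs.

```bash
#!/usr/bin/env bash
# Sketch: start one MPS control daemon for a MIG device, with a pipe and log
# directory unique to that device. The base-directory layout and the
# MPS_CONTROL override (useful for dry runs) are assumptions of this sketch.
start_mps_for_mig() {
  local uuid="$1" base="${2:-/tmp/mps}"
  local ctl="${MPS_CONTROL:-nvidia-cuda-mps-control}"
  local dir="${base}/${uuid}"
  mkdir -p "${dir}/pipe" "${dir}/log" || return 1
  (
    # The environment is set in a subshell so it does not leak into the caller.
    export CUDA_VISIBLE_DEVICES="$uuid"
    export CUDA_MPS_PIPE_DIRECTORY="${dir}/pipe"
    export CUDA_MPS_LOG_DIRECTORY="${dir}/log"
    "$ctl" -d   # -d starts the MPS control daemon in the background
  )
}
```

To start one daemon per MIG device, loop over the UUIDs: <code>for u in $(nvidia-smi -L | grep -o 'MIG-[0-9a-f-]*'); do start_mps_for_mig "$u"; done</code>. Clients must use the same pipe directory and device, e.g. <code>CUDA_MPS_PIPE_DIRECTORY=/tmp/mps/MIG-xxxx/pipe CUDA_VISIBLE_DEVICES=MIG-xxxx ./app</code>, where MIG-xxxx and ./app are placeholders. A daemon can be stopped with <code>echo quit | CUDA_MPS_PIPE_DIRECTORY=/tmp/mps/MIG-xxxx/pipe nvidia-cuda-mps-control</code>.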

== MIG limitations ==

* P2P is not available (no NVLink).

== NVIDIA Display Mode <ref>https://developer.nvidia.com/displaymodeselector</ref> ==

The NVIDIA Display Mode Selector Tool is a utility for configuring the ideal display mode for specialized applications. These include CAVEs, Virtual Production, Location-based Entertainment, and Universal Multi-Instance GPU (MIG). It is compatible with the following GPUs:

* NVIDIA RTX PRO 6000 Blackwell Server Edition
* NVIDIA RTX PRO 6000 Blackwell Workstation Edition
* NVIDIA RTX PRO 6000 Blackwell Max-Q Workstation Edition
* NVIDIA RTX PRO 5000 Blackwell
* NVIDIA L40S
* NVIDIA L40
* NVIDIA RTX 6000 Ada
* NVIDIA RTX 5000 Ada
* NVIDIA A40
* NVIDIA RTX A5000
* NVIDIA RTX A5500
* NVIDIA RTX A6000

=== Additional Prerequisites for RTX PRO Blackwell GPUs ===

On Workstation Edition and Max-Q Workstation Edition GPUs, the display mode is set to graphics by default. It must be switched to compute with DisplayModeSelector (>= 1.72.0) before MIG can be enabled.<ref>https://docs.nvidia.com/datacenter/tesla/pdf/MIG_User_Guide.pdf</ref>

Verify that the GPU vBIOS version meets the minimum version listed in the following table.

{| class="wikitable"
!GPU
!Minimum vBIOS version
|-
|RTX PRO 5000 Blackwell
|98.02.73.00.00
|-
|RTX PRO 6000 Blackwell Workstation Edition
|98.02.55.00.00
|-
|RTX PRO 6000 Blackwell Max-Q
|98.02.6A.00.00
|}

To check the current vBIOS version:
<syntaxhighlight lang="bash">
$ nvidia-smi --query-gpu=vbios_version --format=csv
</syntaxhighlight>
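Comparing version strings such as 98.02.6A.00.00 by eye is error-prone. The following is a minimal sketch of a comparison. It assumes each dot-separated field is a hexadecimal number. The "6A" field suggests this, but it remains an assumption. <code>vbios_at_least</code> is a hypothetical helper that succeeds when the first version is at least the second.

```bash
#!/usr/bin/env bash
# Sketch: succeed if vBIOS version $1 is at least $2. Each dot-separated
# field is compared as a hexadecimal number; treating fields such as "6A"
# as hex is an assumption. vbios_at_least is a hypothetical helper.
vbios_at_least() {
  local -a cur min
  local i c m
  IFS=. read -ra cur <<< "$1"
  IFS=. read -ra min <<< "$2"
  for ((i = 0; i < ${#min[@]}; i++)); do
    c=$((16#${cur[i]:-0}))   # missing fields count as 0
    m=$((16#${min[i]}))
    if ((c > m)); then return 0; fi
    if ((c < m)); then return 1; fi
  done
  return 0   # all compared fields are equal
}
```

For example, <code>vbios_at_least "$(nvidia-smi --query-gpu=vbios_version --format=csv,noheader | head -n 1)" 98.02.55.00.00</code> checks the first GPU against the RTX PRO 6000 Blackwell Workstation Edition minimum from the table.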

Example of setting the GPU mode:
<syntaxhighlight lang="bash">
# Set the display mode to compute
sudo ./DisplayModeSelector --gpumode=compute --gpu=<GPU_ID>

# Switch back to graphics mode
sudo ./DisplayModeSelector --gpumode=graphics --gpu=<GPU_ID>
</syntaxhighlight>

<nowiki>**</nowiki> Note: On single-GPU workstations where the RTX PRO 6000 serves as the primary display adapter, setting the display mode to compute disables physical display output. Make sure SSH access is available before proceeding. On systems with multiple GPUs, do not apply display mode changes to the primary display adapter, so that physical display output is preserved.

== MIG supported GPUs<ref>https://docs.nvidia.com/datacenter/tesla/mig-user-guide/</ref> ==

{| class="wikitable"
!GPU<ref>https://docs.nvidia.com/datacenter/tesla/mig-user-guide/getting-started-with-mig.html</ref>
!CUDA Version
!NVIDIA Driver Version
|-
|A100 / A30
|CUDA 11
|R450 (>= 450.80.02) or later
|-
|H100 / H200
|CUDA 12
|R525 (>= 525.53) or later
|-
|B200
|CUDA 12
|R570 (>= 570.133.20) or later
|-
|RTX PRO 6000 Blackwell (all editions), RTX PRO 5000 Blackwell
|CUDA 12
|R575 (>= 575.51.03) or later
|}
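The driver requirement in the table can be checked with a version comparison. This sketch relies on <code>sort -V</code> (version sort, available in GNU coreutils) and on <code>nvidia-smi --query-gpu=driver_version</code>. <code>driver_at_least</code> is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: succeed if driver version $1 is at least $2 (e.g. 575.51.03).
# Relies on version sort (sort -V, available in GNU coreutils).
# driver_at_least is a hypothetical helper name.
driver_at_least() {
  local lowest
  lowest="$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)"
  [ "$lowest" = "$2" ]   # $2 sorts first (or ties) only when $1 >= $2
}
```

For example, <code>driver_at_least "$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)" 570.133.20</code> succeeds only if the installed driver meets the B200 minimum listed above.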

== References ==

<references />

Latest revision as of 14:03, 26 November 2025


Benefits of MIG on MIG-capable GPUs include:
* Physical allocation of resources used by parallel GPU workloads
* Secure multi-tenant environments with isolation and predictable QoS
* Versatile profiles with dynamic configuration
* Maximized utilization by configuring for specific workloads
* An unchanged CUDA programming model

== MIG Terminology ==

MIG allows one or more GPU instances to be allocated within a GPU, so that a single GPU appears as if it were many.

'''GPU instance (GI)''': a fully isolated collection of physical GPU resources such as GPU memory and GPU SMs. A GPU instance can contain one or more compute instances.

'''Compute instance (CI)''': an isolated collection of GPU SMs (CUDA cores) belonging to a single GPU instance. It provides partial isolation of compute resources within the GPU instance, along with independent workload scheduling.

'''MIG device''': a GPU instance combined with a compute instance. MIG devices are assigned UUIDs, which can be displayed with <code>nvidia-smi -L</code>.

To use MIG effectively, tune clock speed, MIG profile configuration, and other settings for the expected MIG use case. There is no single 'best' or 'optimal' combination of profiles and configurations. However, this table shows NVIDIA-recommended workload types for the different MIG sizes of the A100.

'''GPU slice''': the smallest fraction of the GPU, combining a single GPU memory slice and a single GPU SM slice.

'''GPU memory slice''': the smallest fraction of the GPU's memory, including the corresponding memory controllers and cache. It is roughly 1/8 of the total GPU memory resources.

'''GPU SM slice''': the smallest fraction of the GPU's SMs, roughly 1/7 of the total number of SMs.

'''GPU engine''': the unit that executes work on the GPU. Engines are scheduled independently and work in a GPU context. Different engines handle different tasks, such as the compute engine or the copy engine.

== MIG Configuration (profile) ==


<syntaxhighlight lang="bash">
# H100 NVL, show available MIG profiles
$ nvidia-smi mig -lgip
+-----------------------------------------------------------------------------+
| GPU instance profiles: |
| GPU Name ID Instances Memory P2P SM DEC ENC |
| Free/Total GiB CE JPEG OFA |
|=============================================================================|
| 0 MIG 1g.12gb 19 7/7 10.75 No 16 1 0 |
| 1 1 0 |
+-----------------------------------------------------------------------------+
| 0 MIG 1g.12gb+me 20 1/1 10.75 No 16 1 0 |
| 1 1 1 |
+-----------------------------------------------------------------------------+
| 0 MIG 1g.24gb 15 4/4 21.62 No 26 1 0 |
| 1 1 0 |
+-----------------------------------------------------------------------------+
| 0 MIG 2g.24gb 14 3/3 21.62 No 32 2 0 |
| 2 2 0 |
+-----------------------------------------------------------------------------+
| 0 MIG 3g.47gb 9 2/2 46.38 No 60 3 0 |
| 3 3 0 |
+-----------------------------------------------------------------------------+
| 0 MIG 4g.47gb 5 1/1 46.38 No 64 4 0 |
| 4 4 0 |
+-----------------------------------------------------------------------------+
| 0 MIG 7g.94gb 0 1/1 93.12 No 132 7 0 |
| 8 7 1 |
+-----------------------------------------------------------------------------+
</syntaxhighlight>
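When scripting instance creation, it helps to read profile IDs and free instance counts from this table. The following is a minimal sketch. It assumes the whitespace-separated column order shown above: GPU, Name, ID, Free/Total, Memory, P2P, SM, and so on. That layout may differ between driver versions. <code>summarize_lgip</code> is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: summarize `nvidia-smi mig -lgip` output, one line per profile:
#   name id=ID free/total=N/M mem_gib=GiB sms=SMs
# Assumes the whitespace-separated column order shown above
# (GPU, Name, ID, Free/Total, Memory, P2P, SM, ...); summarize_lgip is a
# hypothetical helper and the layout may change between driver versions.
summarize_lgip() {
  awk '$3 == "MIG" {
    printf "%s id=%s free/total=%s mem_gib=%s sms=%s\n", $4, $5, $6, $7, $9
  }'
}
```

<code>nvidia-smi mig -lgip | summarize_lgip</code> prints one line per profile. The second line of each table row (CE, JPEG, OFA) is skipped because its third field is not "MIG".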

Enable MIG Mode

When MIG is enabled on the GPU, depending on the GPU product, the driver will attempt to reset the GPU so that MIG mode can take effect.

$ sudo nvidia-smi -pm 1 # persistence mode is required to enable MIG

Enabled Legacy persistence mode for GPU 00000000:4F:00.0.

All done.

$ sudo nvidia-smi -mig 1 # Enable MIG

Enabled MIG Mode for GPU 00000000:4F:00.0

All done.

#Verify enabled MIG mode

$ nvidia-smi --query-gpu=pci.bus_id,mig.mode.current --format=csv

pci.bus_id, mig.mode.current

00000000:4F:00.0, Enabled

If encounter a message saying “Warning: MIG mode is in pending enable state for GPU XXX:XX:XX.X:In use by another client …”, when enabling MIG. you need to stop nvsm and dcgm, then try again.[1]

$ sudo systemctl stop nvsm $ sudo systemctl stop dcgm $ sudo nvidia-smi -i 0 -mig 1

** Reference MIG user guide for various post processing of MIG configuration change to take effect.

List GPU Instance Profiles

List the possible placements available using the following command. it would be helpful to review performance of different MIG instance size using MLPerf with Single Shot MultiBox Detector (SSD) training benchmark.

The syntax of the placement is {<index>}:<GPU Slice Count> and shows the placement of the instances on the GPU

#H100 NVL, list possible placements

#The syntax of the placement output is {<index>}:<GPU Slice Count> and shows the placement of the instances on the GPU

$ nvidia-smi mig -lgipp

GPU 0 Profile ID 19 Placements: {0,1,2,3,4,5,6}:1

GPU 0 Profile ID 20 Placements: {0,1,2,3,4,5,6}:1

GPU 0 Profile ID 15 Placements: {0,2,4,6}:2

GPU 0 Profile ID 14 Placements: {0,2,4}:2

GPU 0 Profile ID 9 Placements: {0,4}:4

GPU 0 Profile ID 5 Placement : {0}:4

GPU 0 Profile ID 0 Placement : {0}:8

Creating GPU Instances

Without creating GPU instances (including corresponding compute instances), CUDA workloads cannot be run on the GPU.

Creating GPU instances using the -cgi and create the corresponding Compute Instances (CI). By using the -C option

# no GPU instances yet

$ nvidia-smi mig -lgi

No GPU instances found: Not Found

# Create 9,19,19,19 partitions

# -cgi : this flag specifies the MIG partition configuration. The numbers following the flag represent the MIG partitions for each of the four MIG device slices. In this case, there are four slices with configurations 9, 19, 19, and 19 compute instances each. These numbers correspond to the profile IDs retrieved previously

# creates the corresponding compute instances for the MIG partitions automatically

$ sudo nvidia-smi mig -cgi 9,19,19,19 -C

Successfully created GPU instance ID 2 on GPU 0 using profile MIG 3g.47gb (ID 9)

Successfully created compute instance ID 0 on GPU 0 GPU instance ID 2 using profile MIG 3g.47gb (ID 2)

Successfully created GPU instance ID 7 on GPU 0 using profile MIG 1g.12gb (ID 19)

Successfully created compute instance ID 0 on GPU 0 GPU instance ID 7 using profile MIG 1g.12gb (ID 0)

Successfully created GPU instance ID 8 on GPU 0 using profile MIG 1g.12gb (ID 19)

Successfully created compute instance ID 0 on GPU 0 GPU instance ID 8 using profile MIG 1g.12gb (ID 0)

Successfully created GPU instance ID 9 on GPU 0 using profile MIG 1g.12gb (ID 19)

Successfully created compute instance ID 0 on GPU 0 GPU instance ID 9 using profile MIG 1g.12gb (ID 0)

# List current MIG GPU instances

$ sudo nvidia-smi mig -lgi

+-------------------------------------------------------+

| GPU instances: |

| GPU Name Profile Instance Placement |

| ID ID Start:Size |

|=======================================================|

| 0 MIG 1g.12gb 19 7 0:1 |

+-------------------------------------------------------+

| 0 MIG 1g.12gb 19 8 1:1 |

+-------------------------------------------------------+

| 0 MIG 1g.12gb 19 9 2:1 |

+-------------------------------------------------------+

| 0 MIG 3g.47gb 9 2 4:4 |

+-------------------------------------------------------+

List up MIG devices

# Show GPU UUID, docker container can use the UUID

$ nvidia-smi -L

GPU 0: NVIDIA H100 NVL (UUID: GPU-d2481ba7-bc0a-5ff0-0d5b-b4ba1d4d56c5)

MIG 3g.47gb Device 0: (UUID: MIG-45174f60-85a9-51f4-bc61-f6dc57dc60e1)

MIG 1g.12gb Device 1: (UUID: MIG-b6bbb81c-71b7-54a2-a621-9b2a12215cab)

MIG 1g.12gb Device 2: (UUID: MIG-ea284e9f-1cfb-5980-8948-bc86b7c1ae71)

MIG 1g.12gb Device 3: (UUID: MIG-2bbae2fb-2ef8-5917-badf-b0571b1381cf)

# Show physical GPU and MIG devices
$ nvidia-smi
Sun May 25 22:19:13 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.04 Driver Version: 570.124.04 CUDA Version: 12.8 |
|-----------------------------------------+------------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+========================+======================|
| 0 NVIDIA H100 NVL On | 00000000:4F:00.0 Off | On |
| N/A 38C P0 58W / 400W | 87MiB / 95830MiB | N/A Default |
| | | Enabled |
+-----------------------------------------+------------------------+----------------------+
+-----------------------------------------------------------------------------------------+
| MIG devices: |
+------------------+----------------------------------+-----------+-----------------------+
| GPU GI CI MIG | Memory-Usage | Vol| Shared |
| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |
| | | ECC| |
|==================+==================================+===========+=======================|
| 0 2 0 0 | 44MiB / 47488MiB | 60 0 | 3 0 3 0 3 |
| | 0MiB / 65535MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| 0 7 0 1 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| 0 8 0 2 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| 0 9 0 3 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=========================================================================================|
| No running processes found |
+-----------------------------------------------------------------------------------------+

Using a MIG device

# To use a specific MIG device, look up its MIG device index or its MIG UUID
$ nvidia-smi -L
GPU 0: NVIDIA H100 NVL (UUID: GPU-d2481ba7-bc0a-5ff0-0d5b-b4ba1d4d56c5)
MIG 3g.47gb Device 0: (UUID: MIG-45174f60-85a9-51f4-bc61-f6dc57dc60e1)
MIG 1g.12gb Device 1: (UUID: MIG-b6bbb81c-71b7-54a2-a621-9b2a12215cab)
MIG 1g.12gb Device 2: (UUID: MIG-ea284e9f-1cfb-5980-8948-bc86b7c1ae71)
MIG 1g.12gb Device 3: (UUID: MIG-2bbae2fb-2ef8-5917-badf-b0571b1381cf)
# The list above shows that GPU 0 has MIG devices 0 to 3. A MIG device can also be
# addressed in short form as <GPU ID>:<MIG device index>, i.e. 0:0, 0:1, 0:2 and 0:3

# Use MIG device 0:2 (selected by its UUID) in a Docker container
$ docker run --gpus '"device=MIG-ea284e9f-1cfb-5980-8948-bc86b7c1ae71"' --rm nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi
Sun May 25 22:56:04 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.04 Driver Version: 570.124.04 CUDA Version: 12.8 |
|-----------------------------------------+------------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+========================+======================|
| 0 NVIDIA H100 NVL On | 00000000:4F:00.0 Off | On |
| N/A 39C P0 59W / 400W | N/A | N/A Default |
| | | Enabled |
+-----------------------------------------+------------------------+----------------------+
+-----------------------------------------------------------------------------------------+
| MIG devices: |
+------------------+----------------------------------+-----------+-----------------------+
| GPU GI CI MIG | Memory-Usage | Vol| Shared |
| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |
| | | ECC| |
|==================+==================================+===========+=======================|
| 0 8 0 0 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=========================================================================================|
| No running processes found |
+-----------------------------------------------------------------------------------------+

# Alternatively, use the short form <GPU ID>:<MIG device index> instead of the UUID (here MIG device 0:1)
$ docker run --gpus '"device=0:1"' --rm nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi
Sun May 25 22:56:47 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 570.124.04 Driver Version: 570.124.04 CUDA Version: 12.8 |
|-----------------------------------------+------------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+========================+======================|
| 0 NVIDIA H100 NVL On | 00000000:4F:00.0 Off | On |
| N/A 39C P0 59W / 400W | N/A | N/A Default |
| | | Enabled |
+-----------------------------------------+------------------------+----------------------+
| MIG devices: |
+------------------+----------------------------------+-----------+-----------------------+
| GPU GI CI MIG | Memory-Usage | Vol| Shared |
| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |
| | | ECC| |
|==================+==================================+===========+=======================|
| 0 7 0 0 | 15MiB / 11008MiB | 16 0 | 1 0 1 0 1 |
| | 0MiB / 16383MiB | | |
+------------------+----------------------------------+-----------+-----------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=========================================================================================|
| No running processes found |
+-----------------------------------------------------------------------------------------+
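You can combine the UUID form above with a loop to run one container per MIG device. This is a minimal sketch that makes a few assumptions. The MIG UUIDs are supplied on stdin, one per line, for example copied from `nvidia-smi -L`. The containers run one after another. `run_on_each_mig` and the `DRY_RUN` switch are hypothetical names introduced here and are not Docker features.

```shell
#!/bin/sh
# run_on_each_mig IMAGE [CMD...]
# Reads MIG UUIDs from stdin (one per line) and runs IMAGE once per MIG
# device, sequentially, each container pinned to a single MIG device.
# Set DRY_RUN=1 to print the docker commands instead of executing them.
run_on_each_mig() {
    image=$1
    shift
    while IFS= read -r uuid; do
        [ -n "$uuid" ] || continue
        # Same value as the interactive example: "device=<uuid>", with the
        # double quotes passed through to docker.
        gpus="\"device=$uuid\""
        if [ -n "${DRY_RUN:-}" ]; then
            printf "docker run --gpus '%s' --rm %s %s\n" "$gpus" "$image" "$*"
        else
            # </dev/null keeps docker from consuming the remaining UUIDs.
            docker run --gpus "$gpus" --rm "$image" "$@" </dev/null
        fi
    done
}
```

For example, to preview the commands for every MIG device on the host:

$ nvidia-smi -L | sed -n 's/.*(UUID: \(MIG-[^)]*\)).*/\1/p' | DRY_RUN=1 run_on_each_mig nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi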

Destroying GPU Instances

Destroy all the CIs and GIs

$ sudo nvidia-smi mig -dci && sudo nvidia-smi mig -dgi
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 7
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 8
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 9
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 2
Successfully destroyed GPU instance ID 7 from GPU 0
Successfully destroyed GPU instance ID 8 from GPU 0
Successfully destroyed GPU instance ID 9 from GPU 0
Successfully destroyed GPU instance ID 2 from GPU 0

Delete the specific CIs created under GI 1

$ sudo nvidia-smi mig -dci -ci 0,1,2 -gi 1
Successfully destroyed compute instance ID 0 from GPU 0 GPU instance ID 1
Successfully destroyed compute instance ID 1 from GPU 0 GPU instance ID 1
Successfully destroyed compute instance ID 2 from GPU 0 GPU instance ID 1
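The two destroy commands above can be wrapped into a helper that removes a single GPU instance completely: first its compute instances, then the GPU instance itself. This is a minimal sketch with a few assumptions. `-dci -gi <id>` without `-ci` is assumed to remove every CI in that GI. `-i` is assumed to select the physical GPU. Please check both against your driver's `nvidia-smi mig -h`. `destroy_gi`, `run` and `DRY_RUN` are hypothetical names.

```shell
#!/bin/sh
# run CMD...
# Executes CMD, or only prints it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# destroy_gi GPU_INDEX GI_ID
# Destroys all compute instances in GPU instance GI_ID on GPU GPU_INDEX,
# then the GPU instance itself (CIs must be gone before the GI can go).
# Assumption: -dci with -gi but without -ci targets every CI in that GI.
destroy_gi() {
    gpu=$1
    gi=$2
    run sudo nvidia-smi mig -i "$gpu" -dci -gi "$gi" &&
        run sudo nvidia-smi mig -i "$gpu" -dgi -gi "$gi"
}
```

Run `DRY_RUN=1 destroy_gi 0 7` first to preview what would be executed for GPU instance 7.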

Monitoring MIG Devices

nvidia-smi does not report utilization metrics for MIG devices: GPU utilization is displayed as N/A even while CUDA programs are running.

For monitoring MIG devices, NVIDIA recommends NVIDIA DCGM v2.0.13 or later.

For example:

$ dcgmi dmon -e 203,204,1001,1002,1003,1004,1005,1009,1010,1011,1012,155 -i <GPU IDs>

* -e specifies the IDs of the metrics (fields) to query, e.g. GPU Activity (1001) and Tensor Core Utilization (1004); -i selects the GPUs to monitor.

The field IDs are defined in https://github.com/NVIDIA/DCGM/blob/master/dcgmlib/dcgm_fields.h
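Raw numeric IDs in `-e` are easy to mistype. The small lookup below builds the list from field names instead. It assumes that the names shown correspond to the twelve IDs in the example above. They are believed to match the `#define`s in dcgm_fields.h, but verify them against the header shipped with your DCGM version. `dcgm_field_id` and `dcgm_field_list` are hypothetical helpers.

```shell
#!/bin/sh
# dcgm_field_id NAME
# Maps a DCGM field name to its numeric ID. Only the twelve fields used in
# the dcgmi example are covered; others must be looked up in dcgm_fields.h.
dcgm_field_id() {
    case $1 in
        DCGM_FI_DEV_GPU_UTIL)            echo 203 ;;
        DCGM_FI_DEV_MEM_COPY_UTIL)       echo 204 ;;
        DCGM_FI_PROF_GR_ENGINE_ACTIVE)   echo 1001 ;;
        DCGM_FI_PROF_SM_ACTIVE)          echo 1002 ;;
        DCGM_FI_PROF_SM_OCCUPANCY)       echo 1003 ;;
        DCGM_FI_PROF_PIPE_TENSOR_ACTIVE) echo 1004 ;;
        DCGM_FI_PROF_DRAM_ACTIVE)        echo 1005 ;;
        DCGM_FI_PROF_PCIE_TX_BYTES)      echo 1009 ;;
        DCGM_FI_PROF_PCIE_RX_BYTES)      echo 1010 ;;
        DCGM_FI_PROF_NVLINK_TX_BYTES)    echo 1011 ;;
        DCGM_FI_PROF_NVLINK_RX_BYTES)    echo 1012 ;;
        DCGM_FI_DEV_POWER_USAGE)         echo 155 ;;
        *) echo "unknown field: $1" >&2; return 1 ;;
    esac
}

# dcgm_field_list NAME...
# Prints the comma-separated ID list expected by `dcgmi dmon -e`;
# fails if any name is unknown.
dcgm_field_list() {
    list=
    for name in "$@"; do
        id=$(dcgm_field_id "$name") || return 1
        list=${list:+$list,}$id
    done
    echo "$list"
}
```

Usage: `dcgmi dmon -e "$(dcgm_field_list DCGM_FI_PROF_GR_ENGINE_ACTIVE DCGM_FI_PROF_PIPE_TENSOR_ACTIVE)" -i 0`.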

MIG and Multi-Process Service[2]

MPS (Multi-Process Service) and MIG can work together, potentially achieving even higher levels of utilization for certain workloads.

MIG and MPS Workflow

- Configure the desired MIG geometry on the GPU.

- Set the CUDA_MPS_PIPE_DIRECTORY variable to a unique directory for each MPS server, so that the multiple MPS servers and their clients can communicate with each other using named pipes and Unix domain sockets.

- Launch the application, specifying the MIG device with CUDA_VISIBLE_DEVICES.
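The workflow above can be sketched as a loop that starts one MPS control daemon per MIG device. This is a minimal sketch with some assumptions. The MIG UUIDs are passed as arguments. Per-device directories under /tmp/mps are acceptable; the hypothetical MPS_BASE variable overrides that location. `start_mps_per_mig`, `mps_dir` and `DRY_RUN` are illustrative names. `nvidia-cuda-mps-control -d`, CUDA_MPS_PIPE_DIRECTORY and CUDA_MPS_LOG_DIRECTORY are from the MPS documentation.

```shell
#!/bin/sh
# mps_dir UUID
# Per-MIG-device base directory (hypothetical layout: $MPS_BASE/<uuid>).
mps_dir() {
    echo "${MPS_BASE:-/tmp/mps}/$1"
}

# start_mps_per_mig UUID...
# Starts one MPS control daemon per MIG device, each with its own pipe and
# log directory so that the servers do not collide.
# Set DRY_RUN=1 to print the launch commands instead of running them.
start_mps_per_mig() {
    for uuid in "$@"; do
        pipe=$(mps_dir "$uuid")/pipe
        log=$(mps_dir "$uuid")/log
        if [ -n "${DRY_RUN:-}" ]; then
            echo "CUDA_VISIBLE_DEVICES=$uuid CUDA_MPS_PIPE_DIRECTORY=$pipe CUDA_MPS_LOG_DIRECTORY=$log nvidia-cuda-mps-control -d"
        else
            mkdir -p "$pipe" "$log" &&
                CUDA_VISIBLE_DEVICES=$uuid \
                CUDA_MPS_PIPE_DIRECTORY=$pipe \
                CUDA_MPS_LOG_DIRECTORY=$log \
                nvidia-cuda-mps-control -d
        fi
    done
}
```

A client then sets the same device and pipe directory, for example `CUDA_VISIBLE_DEVICES=<MIG UUID> CUDA_MPS_PIPE_DIRECTORY=/tmp/mps/<MIG UUID>/pipe ./my_app`, where `./my_app` is a placeholder. To stop each daemon, run `echo quit | CUDA_MPS_PIPE_DIRECTORY=<same pipe dir> nvidia-cuda-mps-control`.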

MIG limitations

- Graphics contexts are not supported.

- GPU-to-GPU P2P is not available (neither over PCIe nor over NVLink).

NVIDIA Display Mode [3]

The NVIDIA Display Mode Selector Tool is a utility designed to configure the ideal display mode for various specialized applications, such as CAVES, Virtual Production, Location-based Entertainment, and Universal Multi-instance GPU (MIG). It is compatible with the following GPUs:

- NVIDIA RTX PRO 6000 Blackwell Server Edition
- NVIDIA RTX PRO 6000 Blackwell Workstation Edition
- NVIDIA RTX PRO 6000 Blackwell Max-Q Workstation Edition
- NVIDIA RTX PRO 5000 Blackwell
- NVIDIA L40S
- NVIDIA L40
- NVIDIA RTX 6000 Ada
- NVIDIA RTX 5000 Ada
- NVIDIA A40
- NVIDIA RTX A5000
- NVIDIA RTX A5500
- NVIDIA RTX A6000

Additional Prerequisites for RTX PRO Blackwell GPUs

The display mode defaults to graphics. On the Workstation Edition and Max-Q Workstation Edition GPUs, it must be set to compute with DisplayModeSelector (version 1.72.0 or later) before MIG can be enabled.[4]

Verify that the GPU vBIOS version meets the minimum version listed in the following table.

| GPU | Minimum vBIOS version |
|---|---|
| RTX PRO 5000 Blackwell | 98.02.73.00.00 |
| RTX PRO 6000 Blackwell Workstation Edition | 98.02.55.00.00 |
| RTX PRO 6000 Blackwell Max-Q | 98.02.6A.00.00 |

To check the current vBIOS version:

$ nvidia-smi --query-gpu=vbios_version --format=csv
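Comparing vBIOS versions against the table by eye is error-prone because the fields contain hex digits. The following is a minimal comparison sketch. It assumes each dot-separated vBIOS field should be compared as a hexadecimal number. Entries such as 6A suggest this, but the source does not confirm it. `vbios_at_least` is a hypothetical helper.

```shell
#!/bin/sh
# vbios_at_least CURRENT MINIMUM
# Succeeds if CURRENT >= MINIMUM. Versions are compared field by field,
# each dot-separated field read as hexadecimal (assumption, see above).
# Missing fields count as 0.
vbios_at_least() {
    cur=$1
    min=$2
    while [ -n "$cur" ] || [ -n "$min" ]; do
        c=${cur%%.*}
        m=${min%%.*}
        # Advance to the next field (empty once the last field is consumed).
        case $cur in *.*) cur=${cur#*.} ;; *) cur= ;; esac
        case $min in *.*) min=${min#*.} ;; *) min= ;; esac
        c=$((0x${c:-0}))
        m=$((0x${m:-0}))
        [ "$c" -gt "$m" ] && return 0
        [ "$c" -lt "$m" ] && return 1
    done
    return 0
}
```

Usage: `vbios_at_least "$(nvidia-smi --query-gpu=vbios_version --format=csv,noheader -i 0)" 98.02.73.00.00 && echo "vBIOS OK"`.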

Example of setting the GPU mode:

To set the display mode to compute:

$ sudo ./DisplayModeSelector --gpumode=compute --gpu=<GPU_ID>

To switch back to graphics mode:

$ sudo ./DisplayModeSelector --gpumode=graphics --gpu=<GPU_ID>

** Note

On single-card workstation configs where the RTX PRO 6000 GPU serves as the primary display adapter, setting display mode to compute will disable physical display output. Ensure SSH access is available before proceeding. For systems with multiple GPUs, ensure display mode changes are not applied to the primary display adapter to preserve physical display output.

MIG supported GPUs[5]

| GPU[6] | CUDA Version | NVIDIA Driver Version |
|---|---|---|
| A100 / A30 | CUDA 11 | R450 (>= 450.80.02) or later |
| H100 / H200 | CUDA 12 | R525 (>= 525.53) or later |
| B200 | CUDA 12 | R570 (>= 570.133.20) or later |
| RTX PRO 6000 Blackwell (All editions), RTX PRO 5000 Blackwell | CUDA 12 | R575 (>= 575.51.03) or later |
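To check the installed driver against this table, you can compare the version reported by nvidia-smi with the minimum using version-aware sorting. This is a minimal sketch that assumes GNU coreutils `sort -V` is available. `driver_at_least` is a hypothetical helper.

```shell
#!/bin/sh
# driver_at_least CURRENT MINIMUM
# Succeeds if driver version CURRENT (e.g. 570.124.04) >= MINIMUM.
# `sort -V` orders dotted versions numerically per component; if MINIMUM
# sorts first (or the two are equal), CURRENT meets the requirement.
driver_at_least() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

Usage: `driver_at_least "$(nvidia-smi --query-gpu=driver_version --format=csv,noheader -i 0)" 570.133.20 && echo "meets the B200 minimum"`.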

References

[1] https://docs.nvidia.com/datacenter/tesla/mig-user-guide/

[2] https://docs.nvidia.com/deploy/mps/index.html

[3] https://developer.nvidia.com/displaymodeselector

[4] https://docs.nvidia.com/datacenter/tesla/pdf/MIG_User_Guide.pdf

[5] https://docs.nvidia.com/datacenter/tesla/mig-user-guide/

[6] https://docs.nvidia.com/datacenter/tesla/mig-user-guide/getting-started-with-mig.html