Intel 4th Gen Xeon

No edit summary |

|||

| (8 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

4th Gen Intel® Xeon® Scalable Processors list [https://www.colfax-intl.com/downloads/4th-gen-xeon-acclerators-eguide-with-4th-gen-benchmarks.pdf] | Intel has launched its 4th Gen Intel Xeon scalable processors (Sapphire Rapids) as of Jan'23, 4th Gen Xeon is purpose-built to improve data center performance, efficiency, security, and capabilities for AI, cloud computing, networks, edge computing, and supercomputers. | ||

[[File:Intel 4th Gen Xeon.png|alt=Intel 4th Gen Xeon|thumb|Intel 4th Gen Xeon]] | |||

The new Xeon Models are available in six categories, including the | |||

* Max 9400, | |||

* Platinum 8000 - '''Platinum 8400''' processors are designed for advanced data analytics, AI, and hybrid cloud data centers, offering high performance, platform capabilities, and [[workload]] acceleration, as well as enhanced hardware-based security and multi-socket (Sapphire Rapids platform supports up to 8 sockets<ref>https://linustechtips.com/topic/1480633-what-is-better-amd-epyc-9004-series-or-4th-gen-intel-xeon-processors/</ref>) processing | |||

* Gold 6000/Gold 5000 - optimized for data center and multi-cloud workloads. They offer enhanced memory speed, capacity, security, and workload acceleration | |||

* Silver 4000 - Multi purpose essential performance, improved memory speed, and power efficiency for entry-level data center computing, networking, and storage. | |||

* Bronze 3000 | |||

* Max Series. | |||

[[File:Intel liquid cooling.png|alt=Intel liquid cooling|thumb|Intel liquid cooling]] | |||

The new Optimized Power Mode can provide up to 20 percent socket power savings with less than 5 percent performance impact for specific workloads. Innovations in air and [[liquid cooling]] can further reduce total data center energy consumption. Each series has a range of different models that can be drilled down to their target use case: | |||

* Performance general purpose | |||

* Mainline general purpose | |||

* Liquid cooling general purpose | |||

* Single socket general purpose (“Q” Series) | |||

* Long-life Use (IoT) general purpose (“T” Series) | |||

* IMDB/Analytics/virtualization optimized (“H” Series) | |||

* 5G/Networking optimized (“N” Series) | |||

* Cloud-optimized IaaS (“P”, “V” and “M” Series) | |||

* Storage and hyperconverged infrastructure optimized (“S” series) | |||

* HPC optimized (i.e., the Intel Xeon CPU Max series) | |||

== General Improvement == | |||

=== PCI Express Gen5 (PCIe 5.0) === | |||

4th Gen Intel Xeon Scalable processors have up to 80 lanes of [[PCIe]] 5.0—ideal for fast networking, high-bandwidth accelerators, and high-performance storage devices. PCIe 5.0 doubles the | |||

I/O bandwidth from PCIe 4.0,9 maintains backward compatibility, and provides foundational slots for CX | |||

=== DDR5 === | |||

Improve compute performance by overcoming data bottlenecks with higher memory bandwidth. DDR5 offers up to 1.5x bandwidth improvement over DDR4,10 enabling opportunities to improve performance, capacity, power efficiency, and cost. 4th Gen Intel Xeon Scalable processors offer up to 4,800 MT/s (1 DPC) or 4,400 MT/s (2 DPC) with DDR5. | |||

=== CXL === | |||

Reduce compute latency in the data center and help lower total cost of ownership (TCO) with CXL 1.1 for next-generation workloads. CXL is an alternate protocol that runs across the standard PCIe physical layer and can [[support]] both standard PCIe devices and CXL devices on the same link. CXL provides a critical capability to create a unified, coherent memory space between CPUs and accelerators, and it will revolutionize how data center server architectures will be built for years to come | |||

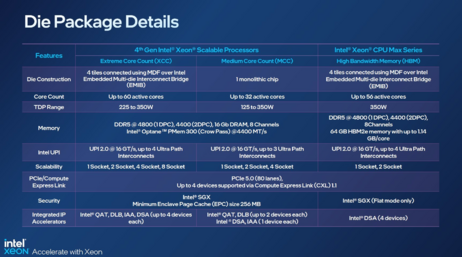

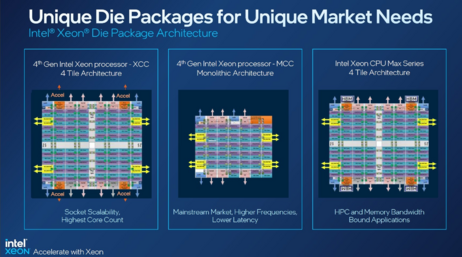

== XCC, MCC, and HBM Variants<ref>https://www.intel.com/content/www/us/en/support/articles/000094640/processors.html</ref> == | |||

One of the overlooked areas of 4th generation Xeon product is in the processor line's three main configurations. | |||

{| class="wikitable" | |||

|+ | |||

!Variants<ref>https://www.linkedin.com/pulse/4th-gen-intel-xeon-scalable-xcc-mcc-hbm-variants-secret</ref> | |||

!Carrier code<ref>https://www.intel.com/content/www/us/en/support/articles/000094640/processors.html</ref> | |||

!Description | |||

|- | |||

|XCC | |||

|E1A | |||

|packaging to tie together four compute tiles into one logical CPU. Each tile has up to 15 active cores that allow Intel to build up to 60 core CPUs | |||

|- | |||

|MCC | |||

|E1B | |||

|monolithic die has half to a quarter of the onboard acceleration capability, but the TDP range is 125W to 350W with up to 32 active cores. | |||

|- | |||

|HBM | |||

|E1C | |||

|packaging to tie gets its own 16GB HBM2e memory stack for 64GB of high-speed HBM2e memory on the CPU package. | |||

This allows for a higher-speed memory pool that can be used as a separate pool, a transparent caching tier, or even as a system's primary and only memory | |||

|} | |||

[[File:4th gen intel packages.png|left|frameless|462x462px]] | |||

[[File:4th gen intel config.png|frameless|462x462px]] | |||

== 4th Gen Intel® Xeon® Scalable Processors list [https://www.colfax-intl.com/downloads/4th-gen-xeon-acclerators-eguide-with-4th-gen-benchmarks.pdf] == | |||

{| class="wikitable" | {| class="wikitable" | ||

!SKU | !SKU | ||

| Line 977: | Line 1,041: | ||

! colspan="17" |Y Supports Intel Speed Select Technology – Performance Profile 2.0 (Intel SST-PP) | ! colspan="17" |Y Supports Intel Speed Select Technology – Performance Profile 2.0 (Intel SST-PP) | ||

|} | |} | ||

== built-in accelerators<ref>https://www.colfax-intl.com/downloads/4th-gen-xeon-acclerators-eguide-with-4th-gen-benchmarks.pdf</ref> == | |||

Integrated accelerators support today’s most demanding workloads spanning AI, security, HPC, networking, analytics and storage | |||

{| class="wikitable" | |||

|+ | |||

!Name | |||

!Purpose | |||

!Notes | |||

|- | |||

| rowspan="2" |Intel® AI Engine | |||

|Intel® Advanced Matrix Extensions (Intel® AMX) | |||

|Intel AMX is Intel’s next-generation advancement for deep-learning training and | |||

inference on 4th Gen Intel Xeon Scalable processors. Ideal for workloads like natural | |||

language processing, recommendation systems and image recognition, this new | |||

feature extends the built-in AI acceleration capabilities of previous Intel Xeon Scalable | |||

processors while also offering significant performance gains | |||

|- | |||

|Intel® Advanced Vector Extensions 512 (Intel® AVX-512 | |||

|A continuing feature of Intel Xeon Scalable processors, Intel AVX-512 is a general- | |||

purpose performance-enhancing accelerator with a wide range of uses. When it | |||

comes to AI, Intel AVX-512 can accelerate machine learning workloads for training | |||

and inferencing. 4th Gen Intel Xeon Scalable processors with Intel AVX-512 are also | |||

designed to speed up data preprocessin | |||

|- | |||

| rowspan="3" |Intel® Security Engines | |||

|Intel® Software Guard Extensions (Intel® SGX) | |||

|Intel SGX is the most researched, updated and deployed confidential computing | |||

technology in data centers on the market today. This continuing feature of Intel Xeon | |||

Scalable processors provides the foundation for confidential computing solutions | |||

across edge and multi-cloud infrastructures. | |||

Intel SGX offers a hardware-based security solution that is designed to prohibit | |||

access to protected data in use via unique application-isolation technology. By | |||

helping protect selected code and data from inspection or modification, Intel SGX | |||

allows developers to run sensitive data operations inside enclaves to help increase | |||

application security and protect data confidentiality | |||

|- | |||

|Intel® Trust Domain Extensions (Intel® TDX) | |||

|Intel TDX is a new capability available through select cloud providers in 2023 that | |||

offers increased confidentiality at the virtual machine (VM) level, enhancing [[privacy]] | |||

and control over your data. Within an Intel TDX confidential VM, the guest OS and | |||

VM applications are isolated from access by the cloud host, hypervisor and other | |||

VMs on the platform. | |||

|- | |||

|Intel® Crypto Acceleration | |||

|Intel Crypto Acceleration uses single instruction, multiple data (SIMD) techniques to | |||

process more encryption operations in every clock cycle. This can help increase the | |||

total throughput of applications that require strong data encryption, with minimal | |||

impact on performance and user experience | |||

|- | |||

| rowspan="3" |Intel® HPC Engines | |||

|Intel® Advanced Vector Extensions 512 (Intel® AVX-512) | |||

|With ultrawide 512-bit vector operations capabilities, Intel AVX-512 is especially | |||

suited to handle the most demanding computational tasks commonly encountered in | |||

HPC applications. It’s used by organizations across educational, financial, enterprise, | |||

engineering and medical industries. By enabling users to run complex workloads | |||

on existing hardware, Intel AVX-512 accelerates performance for tasks like financial | |||

analytics, 3D modeling and scientific simulations | |||

|- | |||

|Intel® Advanced Matrix Extensions (Intel® AMX) | |||

|In addition to accelerating AI workloads, Intel AMX is also designed to deliver | |||

performance gains across popular HPC workloads. This new built-in accelerator | |||

transforms large matrix math calculations into a single operation and uses a two- | |||

dimensional register file to store large chunks of data | |||

|- | |||

|Intel® Data Streaming Accelerator (Intel® DSA | |||

|Intel DSA is a new feature designed to optimize and speed up streaming data | |||

movement and transformation operations common in networking, data- | |||

processing-intensive applications and high-performance storage. Intel DSA | |||

accelerates HPC workloads by offloading the most common data movement tasks | |||

that cause CPU overhead in data-center-scale deployments | |||

|- | |||

| rowspan="3" |Intel® Network Engines | |||

|Intel® QuickAssist Technology (Intel® QAT) | |||

|Intel QAT boosts performance to meet the demands of today’s networking workloads, | |||

helping systems serve more clients. It can deliver significant workload acceleration for | |||

cryptography, including symmetric and asymmetric encryption and decryption. | |||

Intel QAT using RSA4K can increase client density on an open-source NGINX web | |||

server compared to software running on CPU cores without acceleration | |||

|- | |||

|Intel® vRAN Boost | |||

|Intel vRAN Boost is a new feature that eliminates the need for an external accelerator | |||

card by integrating vRAN acceleration directly into the Intel 4th Gen Xeon Scalable | |||

processor. By reducing vRAN component requirements, it reduces overall vRAN | |||

solution complexity and provides power savings for customers | |||

|- | |||

|Intel® Dynamic Load Balancer (Intel® DLB | |||

|Intel DLB is a new feature that enables the efficient distribution of network | |||

processing across multiple CPU cores. It also restores the order of networking data | |||

packets processed simultaneously on CPU cores. | |||

With Intel DLB, customers can gain higher performance on packet forwarding | |||

compared to software queue management on cores without acceleration. | |||

Additionally, applications can achieve higher packet processing performance than | |||

the previous generation | |||

|- | |||

| rowspan="3" |Intel® Analytics Engines | |||

|Intel® In-Memory Analytics Accelerator (Intel® IAA) | |||

|Intel IAA is designed to accelerate database and analytics performance. By increasing | |||

query throughput and decreasing the memory footprint for in-memory databases | |||

and advanced analytics workloads, this new feature can provide faster data | |||

movement and improve CPU core utilization by reducing dependency on CPU cores. | |||

Intel IAA is ideal for in-memory databases, open-source databases and data stores | |||

like RocksDB, Redis, Cassandra and MySQL. Customers using 4th Gen Intel Xeon | |||

Platinum 8490H with Intel IAA will gain up to 3x higher RocksDB performance | |||

compared to the previous generation | |||

|- | |||

|Intel® Data Streaming Accelerator (Intel® DSA) | |||

|Intel DSA is a new feature designed to optimize and speed up streaming data | |||

movement and transformation operations common in data-intensive applications, | |||

driving better business outcomes. By offloading tasks like data movement, data | |||

copying and error checking, Intel DSA enables the CPU to focus on business- | |||

critical database functions or other analytics workloads. This reduces query | |||

latencies and increases throughput, delivering faster data processing | |||

|- | |||

|Intel® QuickAssist Technology (Intel® QAT) | |||

|Intel QAT can be used to accelerate database backups. When QAT was enabled | |||

as a new feature in SQL Server 2022, it delivered more efficient and higher | |||

performance applications. | |||

With Intel QAT, SQL Server customers achieve up to 2.3x faster backup operations | |||

and a 6% reduction in backup storage capacity | |||

|- | |||

| rowspan="3" |Intel® Storage Engines | |||

|Intel® Data Streaming Accelerator (Intel® DSA) | |||

|Intel DSA works on the CPU — between DRAM, caches and processor cores — and | |||

extends across I/Os to attached memory and storage, plus networked resources. | |||

As Intel’s next-generation direct memory access (DMA) engine, it speeds transfers | |||

between volatile and persistent memory and supports virtualized memory and I/Os. | |||

Customers using 4th Gen Intel Xeon Platinum 8490H with Intel DSA will gain up to | |||

1.6x higher IOPS and up to 37% latency reduction for large packet sequential read | |||

compared to the previous generation | |||

|- | |||

|Intel® QuickAssist Technology (Intel® QAT) | |||

|Intel QAT increases performance of storage workloads and applications by | |||

accelerating cryptography and data compression/decompression. Customers | |||

using 4th Gen Intel Xeon Platinum 8490H with Intel QAT will gain up to 95% | |||

fewer cores and 2x higher level 1 compression throughput compared to the | |||

previous generation | |||

|- | |||

|Intel® Volume Management Device (Intel® VMD) | |||

|Intel VMD is a legacy feature that enables direct control and management of | |||

NVMe SSDs from the PCIe bus without the need for additional hardware adapters. | |||

It allows for a smoother and lower-cost transition to fast NVMe storage while | |||

limiting the downtime of critical infrastructure. With benefits like bootable RAID, | |||

robust surprise hot-plug and blink status LED, Intel VMD increases serviceability | |||

and enables you to deploy next-generation storage with confidence | |||

|} | |||

== Reference == | |||

<references /> | |||

Latest revision as of 10:09, 5 December 2023

Intel launched its 4th Gen Intel Xeon Scalable processors (codenamed Sapphire Rapids) in January 2023. 4th Gen Xeon is purpose-built to improve data center performance, efficiency, security, and capabilities for AI, cloud computing, networking, edge computing, and supercomputing.

The new Xeon models are available in five series:

- Max 9400 (the Intel Xeon CPU Max Series), optimized for HPC.

- Platinum 8000: Platinum 8400 processors are designed for advanced data analytics, AI, and hybrid cloud data centers, offering high performance, platform capabilities, and workload acceleration, as well as enhanced hardware-based security and multi-socket processing (the Sapphire Rapids platform supports up to 8 sockets[1]).

- Gold 6000/Gold 5000: optimized for data center and multi-cloud workloads, with enhanced memory speed, capacity, security, and workload acceleration.

- Silver 4000: multipurpose essential performance, improved memory speed, and power efficiency for entry-level data center computing, networking, and storage.

- Bronze 3000.

The new Optimized Power Mode can provide up to 20 percent socket power savings with less than 5 percent performance impact on specific workloads, and innovations in air and liquid cooling can further reduce total data center energy consumption.

Within each series, models are grouped by target use case:

- Performance general purpose

- Mainline general purpose

- Liquid cooling general purpose (“Q” Series)

- Single socket general purpose (“U” Series)

- Long-life Use (IoT) general purpose (“T” Series)

- IMDB/Analytics/virtualization optimized (“H” Series)

- 5G/Networking optimized (“N” Series)

- Cloud-optimized IaaS (“P”, “V” and “M” Series)

- Storage and hyperconverged infrastructure optimized (“S” Series)

- HPC optimized (i.e., the Intel Xeon CPU Max series)
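The letter suffixes above can be decoded mechanically. The sketch below is a hypothetical helper, not an Intel tool: its mapping is transcribed from this list, the SKU table's section headings (for example -Q liquid cooled, -U single socket, -P/-V/-M cloud IaaS/SaaS/media) and the table footnote for "Y". The article never explains the trailing "+", so the helper only records it.

```python
import re

# Suffix meanings transcribed from this article's use-case list, the SKU table's
# section headings, and the table footnote for "Y". Hypothetical helper, not an Intel tool.
SUFFIX_MEANINGS = {
    "Q": "Liquid cooling general purpose",
    "U": "Single socket general purpose",
    "T": "Long-life use (IoT) general purpose",
    "H": "IMDB/analytics/virtualization optimized",
    "N": "5G/networking optimized",
    "P": "Cloud-optimized IaaS",
    "V": "Cloud-optimized SaaS",
    "M": "Cloud-optimized media",
    "S": "Storage and HCI optimized",
    "Y": "Supports Intel SST-PP 2.0",
}

# Four-digit model number, optional suffix letters, optional trailing "+".
SKU_PATTERN = re.compile(r"^(\d{4})([A-Z]*)(\+?)$")


def decode_sku(sku: str) -> dict:
    """Split a SKU such as "8460Y+" into model number, suffix traits and "+" flag."""
    match = SKU_PATTERN.match(sku.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized SKU format: {sku!r}")
    model, letters, plus = match.groups()
    unknown = [c for c in letters if c not in SUFFIX_MEANINGS]
    if unknown:
        raise ValueError(f"unknown suffix letter(s) {unknown} in {sku!r}")
    return {
        "model": model,
        "traits": [SUFFIX_MEANINGS[c] for c in letters],
        # The article does not explain "+", so it is only recorded, not interpreted.
        "plus": plus == "+",
    }


print(decode_sku("8460Y+"))
```

SKUs without a letter suffix (for example the 84xx performance parts or the Max Series 94xx parts) simply return an empty trait list.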

General Improvements

PCI Express Gen5 (PCIe 5.0)

4th Gen Intel Xeon Scalable processors have up to 80 lanes of PCIe 5.0, ideal for fast networking, high-bandwidth accelerators, and high-performance storage devices. PCIe 5.0 doubles the I/O bandwidth of PCIe 4.0, maintains backward compatibility, and provides the foundational slots for CXL.
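To make "doubles the I/O bandwidth" concrete, the sketch below computes raw per-direction link bandwidth from the per-lane signaling rate. It assumes the standard PCIe figures of 16 GT/s per lane for PCIe 4.0 and 32 GT/s for PCIe 5.0, both with 128b/130b encoding; these rates are not stated in the article, and the result ignores packet and protocol overhead, so real throughput is lower.

```python
# Raw per-direction PCIe bandwidth, before TLP/DLLP protocol overhead.
# Assumes 128b/130b line encoding (used by PCIe 3.0 and later).
GT_PER_S = {"4.0": 16, "5.0": 32}  # per-lane transfer rate in GT/s


def pcie_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Return raw one-direction bandwidth in GB/s (10**9 bytes/s)."""
    # One bit per transfer per lane; remove the 2/130 encoding overhead.
    bits_per_s = GT_PER_S[gen] * 1e9 * (128 / 130)
    return bits_per_s * lanes / 8 / 1e9


if __name__ == "__main__":
    for gen in ("4.0", "5.0"):
        print(f"PCIe {gen} x16: {pcie_bandwidth_gb_s(gen, 16):.1f} GB/s")
    print(f"80 lanes of PCIe 5.0: {pcie_bandwidth_gb_s('5.0', 80):.1f} GB/s")
```

Under these assumptions an x16 PCIe 5.0 slot carries roughly 63 GB/s per direction, and all 80 lanes together roughly 315 GB/s.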

DDR5

DDR5 improves compute performance by overcoming data bottlenecks with higher memory bandwidth. It offers up to 1.5x the bandwidth of DDR4, creating opportunities to improve performance, capacity, power efficiency, and cost. 4th Gen Intel Xeon Scalable processors support DDR5 at up to 4,800 MT/s with one DIMM per channel (1 DPC) or 4,400 MT/s with two DIMMs per channel (2 DPC).
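Theoretical peak memory bandwidth per socket is the transfer rate times the bytes moved per transfer times the number of channels. The sketch below assumes 8 DDR5 channels per socket (the usual Sapphire Rapids configuration, not stated above) and a 64-bit data path per channel with ECC bits excluded.

```python
# Assumptions (not stated in the article): 8 DDR5 channels per socket and a
# 64-bit (8-byte) data path per channel, with ECC bits excluded.
CHANNELS_PER_SOCKET = 8
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gb_s(mt_per_s: int, channels: int = CHANNELS_PER_SOCKET) -> float:
    """Theoretical peak memory bandwidth in GB/s (10**9 bytes/s)."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


for label, rate in (("1 DPC", 4800), ("2 DPC", 4400)):
    print(f"{label} at {rate} MT/s: {peak_bandwidth_gb_s(rate):.1f} GB/s per socket")
```

This gives 307.2 GB/s per socket at 1 DPC and 281.6 GB/s at 2 DPC; sustained bandwidth in practice is lower than these peaks.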

CXL

Support for CXL 1.1 aims to reduce compute latency in the data center and help lower total cost of ownership (TCO) for next-generation workloads. CXL is an alternate protocol that runs over the standard PCIe physical layer, so the same link can support both standard PCIe devices and CXL devices. Its key capability is a unified, coherent memory space shared between CPUs and accelerators, which is expected to reshape how data center servers are architected for years to come.

XCC, MCC, and HBM Variants[2]

One often-overlooked aspect of the 4th Gen Xeon product line is that it comes in three main package configurations.

| Variant[3] | Carrier Code[4] | Description |

|---|---|---|

| XCC | E1A | Multi-tile package that ties together four compute tiles into one logical CPU. Each tile has up to 15 active cores, allowing CPUs with up to 60 cores. |

| MCC | E1B | Monolithic die with up to 32 active cores and a TDP range of 125 W to 350 W, but with one-half to one-quarter of the onboard acceleration capability of XCC. |

| HBM | E1C | Same multi-tile package, with each compute tile getting its own 16 GB HBM2e memory stack, for 64 GB of high-speed HBM2e memory on the CPU package. This memory can be used as a separate pool, a transparent caching tier, or even as the system's primary and only memory. |
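When HBM is the system's only memory, the per-core share of the 64 GB becomes the limiting figure. The sketch below divides the package capacity (assumed to be four 16 GB stacks, one per compute tile) by the Max Series core counts listed in the SKU table below; it ignores any capacity reserved by firmware or the OS.

```python
HBM_GB_PER_PACKAGE = 4 * 16  # assumption: four 16 GB HBM2e stacks = 64 GB

# Core counts of the Intel Xeon CPU Max SKUs, from the SKU table in this article.
MAX_SERIES_CORES = {"9480": 56, "9470": 52, "9468": 48, "9460": 40, "9462": 32}


def hbm_gb_per_core(cores: int) -> float:
    """On-package HBM capacity available per core, in GB."""
    return HBM_GB_PER_PACKAGE / cores


for sku, cores in MAX_SERIES_CORES.items():
    print(f"Max {sku}: {cores} cores, {hbm_gb_per_core(cores):.2f} GB HBM per core")
```

The 56-core 9480 ends up with a little over 1 GB of HBM per core, while the 32-core 9462 gets 2 GB, which is one reason lower-core-count parts can suit HBM-only configurations.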

4th Gen Intel® Xeon® Scalable Processors List[5]

| SKU | Cores | Base (GHz) | All-Core Turbo (GHz) | Max Turbo (GHz) | Cache (MB) | TDP (W) | Maximum Scalability | DDR5 Memory Speed (MT/s) | UPI Links Enabled | Default DSA Devices | Default IAA Devices | Default QAT Devices | Default DLB Devices | Intel SGX Enclave Capacity (per Processor) | Long-Life Use | Intel® On Demand Capable |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2S PERFORMANCE GENERAL PURPOSE | ||||||||||||||||

| 8480+ | 56 | 2.0 | 3.0 | 3.8 | 105 | 350 | 2S | 4800 | 4 | 1 | 1 | 1 | 1 | 512GB | ✓ | |

| 8470 | 52 | 2.0 | 3.0 | 3.8 | 105 | 350 | 2S | 4800 | 4 | 1 | 0 | 0 | 0 | 512GB | ✓ | ✓ |

| 8468 | 48 | 2.1 | 3.1 | 3.8 | 105 | 350 | 2S | 4800 | 4 | 1 | 0 | 0 | 0 | 512GB | ✓ | |

| 8460Y+ | 40 | 2.0 | 2.8 | 3.7 | 105 | 300 | 2S | 4800 | 4 | 1 | 1 | 1 | 1 | 128GB | ✓ | |

| 8462Y+ | 32 | 2.8 | 3.6 | 4.1 | 60 | 300 | 2S | 4800 | 3 | 1 | 1 | 1 | 1 | 128GB | ✓ | ✓ |

| 6448Y | 32 | 2.1 | 3.0 | 4.1 | 60 | 225 | 2S | 4800 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | ✓ |

| 6442Y | 24 | 2.6 | 3.3 | 4.0 | 60 | 225 | 2S | 4800 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | |

| 6444Y | 16 | 3.6 | 4.0 | 4.1 | 45 | 270 | 2S | 4800 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | |

| 6426Y | 16 | 2.5 | 3.3 | 4.1 | 37.5 | 185 | 2S | 4800 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | ✓ |

| 6434 | 8 | 3.7 | 4.1 | 4.1 | 22.5 | 195 | 2S | 4800 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | |

| 5415+ | 8 | 2.9 | 3.6 | 4.1 | 22.5 | 150 | 2S | 4400 | 3 | 1 | 1 | 1 | 1 | 128GB | ✓ | ✓ |

| 2S MAINLINE GENERAL PURPOSE | ||||||||||||||||

| 8452Y | 36 | 2.0 | 2.8 | 3.2 | 67.5 | 300 | 2S | 4800 | 4 | 1 | 0 | 0 | 0 | 128GB | ✓ | ✓ |

| 6438Y+ | 32 | 2.0 | 2.8 | 4.0 | 60 | 205 | 2S | 4800 | 3 | 1 | 1 | 1 | 1 | 128GB | ✓ | |

| 6430 | 32 | 2.1 | 2.6 | 3.4 | 60 | 270 | 2S | 4400 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | ✓ |

| 5420+ | 28 | 2.0 | 2.7 | 4.1 | 52.5 | 205 | 2S | 4400 | 3 | 1 | 1 | 1 | 1 | 128GB | ✓ | ✓ |

| 5418Y | 24 | 2.0 | 2.8 | 3.8 | 45 | 185 | 2S | 4400 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | ✓ |

| 4416+ | 20 | 2.0 | 2.9 | 3.9 | 37.5 | 165 | 2S | 4000 | 2 | 1 | 1 | 1 | 1 | 64GB | ✓ | ✓ |

| 4410Y | 12 | 2.0 | 2.8 | 3.9 | 30 | 150 | 2S | 4000 | 2 | 1 | 0 | 0 | 0 | 64GB | ✓ | ✓ |

| LIQUID COOLED GENERAL PURPOSE (-Q) | ||||||||||||||||

| 8470Q | 52 | 2.1 | 3.2 | 3.8 | 105 | 350 | 2S | 4800 | 4 | 1 | 0 | 0 | 0 | 512GB | ✓ | |

| 6458Q | 32 | 3.1 | 4.0 | 4.0 | 60 | 350 | 2S | 4800 | 3 | 1 | 0 | 0 | 0 | 128GB | ✓ | |

| SINGLE SOCKET GENERAL PURPOSE (-U) | ||||||||||||||||

| 6414U | 32 | 2.0 | 2.6 | 3.4 | 60 | 350 | 1S | 4800 | 0 | 1 | 0 | 0 | 0 | 128GB | ✓ | |

| 5412U | 24 | 2.1 | 2.9 | 3.9 | 45 | 185 | 1S | 4400 | 0 | 1 | 0 | 0 | 0 | 128GB | ✓ | |

| 3408U | 8 | 1.8 | 1.9 | 1.9 | 22.5 | 125 | 1S | 4000 | 0 | 1 | 0 | 0 | 0 | 64GB | ✓ | |

| LONG-LIFE USE (IoT) GENERAL PURPOSE (-T) | ||||||||||||||||

| 4410T | 10 | 2.7 | 3.4 | 4.0 | 26.25 | 150 | 2S | 4000 | 2 | 1 | 0 | 0 | 0 | 64GB | ✓ | ✓ |

| IMDB/ANALYTICS/VIRTUALIZATION OPTIMIZED (-H) – SOCKET SCALABLE | ||||||||||||||||

| 8490H | 60 | 1.9 | 2.9 | 3.5 | 112.5 | 350 | 8S | 4800 | 4 | 4 | 4 | 4 | 4 | 512GB | ||

| 8468H | 48 | 2.1 | 3.0 | 3.8 | 105 | 330 | 8S | 4800 | 4 | 4 | 4 | 4 | 4 | 512GB | ||

| 8460H | 40 | 2.2 | 3.1 | 3.8 | 105 | 330 | 8S | 4800 | 4 | 4 | 4 | 0 | 0 | 512GB | ||

| 8454H | 32 | 2.1 | 2.7 | 3.4 | 82.5 | 270 | 8S | 4800 | 4 | 4 | 4 | 4 | 4 | 512GB | ||

| 8450H | 28 | 2.0 | 2.6 | 3.5 | 75 | 250 | 8S | 4800 | 4 | 4 | 4 | 0 | 0 | 512GB | ||

| 8444H | 16 | 2.9 | 3.2 | 4.0 | 45 | 270 | 8S | 4800 | 4 | 4 | 4 | 0 | 0 | 512GB | ||

| 6448H | 32 | 2.4 | 3.2 | 4.1 | 60 | 250 | 4S | 4800 | 3 | 1 | 1 | 2 | 2 | 512GB | ||

| 6418H | 24 | 2.1 | 2.9 | 4.0 | 60 | 185 | 4S | 4800 | 3 | 1 | 1 | 0 | 0 | 512GB | ✓ | |

| 6416H | 18 | 2.2 | 2.9 | 4.2 | 45 | 165 | 4S | 4800 | 3 | 1 | 1 | 0 | 0 | 512GB | ||

| 6434H | 8 | 3.7 | 4.1 | 4.1 | 22.5 | 195 | 4S | 4800 | 3 | 1 | 1 | 0 | 0 | 512GB | ||

| 5G / NETWORKING OPTIMIZED (-N) | ||||||||||||||||

| 8470N | 52 | 1.7 | 2.7 | 3.6 | 97.5 | 300 | 2S | 4800 | 4 | 4 | 0 | 4 | 4 | 128GB | ✓ | ✓ |

| 8471N | 52 | 1.8 | 2.8 | 3.6 | 97.5 | 300 | 1S | 4800 | 0 | 4 | 0 | 4 | 4 | 128GB | ✓ | ✓ |

| 6438N | 32 | 2.0 | 2.7 | 3.6 | 60 | 205 | 2S | 4800 | 3 | 1 | 0 | 2 | 2 | 128GB | ✓ | ✓ |

| 6428N | 32 | 1.8 | 2.5 | 3.8 | 60 | 185 | 2S | 4000 | 3 | 1 | 0 | 2 | 2 | 128GB | ✓ | ✓ |

| 6421N | 32 | 1.8 | 2.6 | 3.6 | 60 | 185 | 1S | 4400 | 0 | 1 | 0 | 0 | 0 | 128GB | ✓ | ✓ |

| 5418N | 24 | 1.8 | 2.6 | 3.8 | 45 | 165 | 2S | 4000 | 3 | 1 | 0 | 2 | 2 | 128GB | ✓ | ✓ |

| 5411N | 24 | 1.9 | 2.8 | 3.9 | 45 | 165 | 1S | 4400 | 0 | 1 | 0 | 2 | 2 | 128GB | ✓ | ✓ |

| CLOUD OPTIMIZED IaaS (-P) / SaaS (-V) / Media (-M) | ||||||||||||||||

| 8468V | 48 | 2.4 | 2.9 | 3.8 | 97.5 | 330 | 2S | 4800 | 3 | 1 | 1 | 1 | 1 | 128GB | ✓ | |

| 8458P | 44 | 2.7 | 3.2 | 3.8 | 82.5 | 350 | 2S | 4800 | 3 | 1 | 1 | 1 | 1 | 512GB | ✓ | |

| 8461V | 48 | 2.2 | 2.8 | 3.7 | 97.5 | 300 | 1S | 4800 | 0 | 1 | 1 | 1 | 1 | 128GB | ✓ | |

| 6438M | 32 | 2.2 | 2.8 | 3.9 | 60 | 205 | 2S | 4800 | 3 | 1 | 1 | 0 | 0 | 128GB | ✓ | |

| STORAGE & HYPERCONVERGED INFRASTRUCTURE (HCI) OPTIMIZED (-S) | ||||||||||||||||

| 6454S | 32 | 2.2 | 2.8 | 3.4 | 60 | 270 | 2S | 4800 | 4 | 4 | 0 | 4 | 4 | 128GB | ✓ | |

| 5416S | 16 | 2.0 | 2.8 | 4.0 | 30 | 150 | 2S | 4400 | 3 | 1 | 0 | 2 | 2 | 128GB | ✓ | |

| HPC OPTIMIZED (Intel® Xeon® CPU Max Series) | ||||||||||||||||

| 9480 | 56 | 1.9 | 2.6 | 3.5 | 112.5 | 350 | 2S | 4800 | 4 | 4 | 0 | 0 | 0 | 128GB | ||

| 9470 | 52 | 2.0 | 2.7 | 3.5 | 105 | 350 | 2S | 4800 | 4 | 4 | 0 | 0 | 0 | 512GB | ||

| 9468 | 48 | 2.1 | 2.6 | 3.5 | 105 | 350 | 2S | 4800 | 4 | 4 | 0 | 0 | 0 | 128GB | ||

| 9460 | 40 | 2.2 | 2.7 | 3.5 | 97.5 | 350 | 2S | 4800 | 3 | 4 | 0 | 0 | 0 | 128GB | ||

| 9462 | 32 | 2.7 | 3.1 | 3.5 | 75 | 350 | 2S | 4800 | 3 | 4 | 0 | 0 | 0 | 128GB | ||

| Y suffix: supports Intel Speed Select Technology – Performance Profile 2.0 (Intel SST-PP) | ||||||||||||||||
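The "Default ... Devices" columns make it easy to shortlist SKUs by accelerator. The sketch below uses a handful of rows transcribed from the table (not the full list) and a hypothetical `with_accelerators` helper that keeps only SKUs meeting every requested minimum device count.

```python
from typing import NamedTuple


class Sku(NamedTuple):
    name: str
    cores: int
    tdp_w: int
    dsa: int  # default DSA devices
    iaa: int  # default IAA devices
    qat: int  # default QAT devices
    dlb: int  # default DLB devices


# A handful of rows transcribed from the table above (not the full list).
SKUS = [
    Sku("8480+", 56, 350, 1, 1, 1, 1),
    Sku("8470", 52, 350, 1, 0, 0, 0),
    Sku("8490H", 60, 350, 4, 4, 4, 4),
    Sku("8460H", 40, 330, 4, 4, 0, 0),
    Sku("8470N", 52, 300, 4, 0, 4, 4),
    Sku("6454S", 32, 270, 4, 0, 4, 4),
]


def with_accelerators(skus, **minimums):
    """Names of SKUs whose default device counts meet every given minimum,
    e.g. with_accelerators(SKUS, qat=1, iaa=1)."""
    return [
        s.name
        for s in skus
        if all(getattr(s, field) >= need for field, need in minimums.items())
    ]


print(with_accelerators(SKUS, dsa=1, iaa=1, qat=1, dlb=1))
```

Keyword arguments keep the query readable (`qat=4`, `iaa=1`) and fail loudly with an `AttributeError` if a column name is mistyped.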

Built-in Accelerators[5]

Integrated accelerators support today’s most demanding workloads, spanning AI, security, HPC, networking, analytics, and storage.

| Engine | Accelerator | Description |

|---|---|---|

| Intel® AI Engine | Intel® Advanced Matrix Extensions (Intel® AMX) | Intel AMX is Intel’s next-generation advancement for deep-learning training and inference on 4th Gen Intel Xeon Scalable processors. Ideal for workloads such as natural language processing, recommendation systems, and image recognition, it extends the built-in AI acceleration of previous Intel Xeon Scalable processors while offering significant performance gains. |

| Intel® AI Engine | Intel® Advanced Vector Extensions 512 (Intel® AVX-512) | A continuing feature of Intel Xeon Scalable processors, Intel AVX-512 is a general-purpose performance-enhancing accelerator with a wide range of uses. For AI, it can accelerate machine learning training and inference, and on 4th Gen Intel Xeon Scalable processors it is also designed to speed up data preprocessing. |

| Intel® Security Engines | Intel® Software Guard Extensions (Intel® SGX) | Intel describes SGX as the most researched, updated, and deployed confidential computing technology in data centers on the market today. This continuing feature of Intel Xeon Scalable processors provides the foundation for confidential computing solutions across edge and multi-cloud infrastructures. SGX is a hardware-based security solution designed to prevent access to protected data in use through application isolation: by helping protect selected code and data from inspection or modification, it lets developers run sensitive data operations inside enclaves, increasing application security and protecting data confidentiality. |

| Intel® Security Engines | Intel® Trust Domain Extensions (Intel® TDX) | Intel TDX is a new capability, available through select cloud providers in 2023, that increases confidentiality at the virtual machine (VM) level, enhancing privacy and control over your data. Within an Intel TDX confidential VM, the guest OS and VM applications are isolated from access by the cloud host, the hypervisor, and other VMs on the platform. |

| Intel® Security Engines | Intel® Crypto Acceleration | Intel Crypto Acceleration uses single instruction, multiple data (SIMD) techniques to process more encryption operations per clock cycle. This can increase the total throughput of applications that require strong data encryption, with minimal impact on performance and user experience. |

| Intel® HPC Engines | Intel® Advanced Vector Extensions 512 (Intel® AVX-512) | With ultrawide 512-bit vector operations, Intel AVX-512 is especially suited to the most demanding computational tasks common in HPC applications. It is used by organizations in the educational, financial, enterprise, engineering, and medical sectors. By letting users run complex workloads on existing hardware, Intel AVX-512 accelerates tasks such as financial analytics, 3D modeling, and scientific simulations. |

| Intel® HPC Engines | Intel® Advanced Matrix Extensions (Intel® AMX) | In addition to accelerating AI workloads, Intel AMX is designed to deliver performance gains across popular HPC workloads. This new built-in accelerator turns large matrix math calculations into a single operation and uses a two-dimensional register file to store large chunks of data. |

| Intel® HPC Engines | Intel® Data Streaming Accelerator (Intel® DSA) | Intel DSA is a new feature designed to optimize and speed up streaming data movement and transformation operations common in networking, data-processing-intensive applications, and high-performance storage. It accelerates HPC workloads by offloading the most common data movement tasks that cause CPU overhead in data-center-scale deployments. |

| Intel® Network Engines | Intel® QuickAssist Technology (Intel® QAT) | Intel QAT boosts performance to meet the demands of today’s networking workloads, helping systems serve more clients. It can significantly accelerate cryptography, including symmetric and asymmetric encryption and decryption. For example, Intel QAT with RSA-4K (4096-bit RSA) can increase client density on an open-source NGINX web server compared with software running on CPU cores without acceleration. |

| Intel® Network Engines | Intel® vRAN Boost | Intel vRAN Boost is a new feature that eliminates the need for an external accelerator card by integrating vRAN acceleration directly into the 4th Gen Intel Xeon Scalable processor. By reducing vRAN component requirements, it lowers overall vRAN solution complexity and provides power savings for customers. |

| Intel® Network Engines | Intel® Dynamic Load Balancer (Intel® DLB) | Intel DLB is a new feature that efficiently distributes network processing across multiple CPU cores and restores the order of networking data packets processed simultaneously on those cores. With Intel DLB, customers can achieve higher packet-forwarding performance than with software queue management on cores without acceleration, and higher packet processing performance than with the previous generation. |

| Intel® Analytics Engines | Intel® In-Memory Analytics Accelerator (Intel® IAA) | Intel IAA is designed to accelerate database and analytics performance. By increasing query throughput and decreasing the memory footprint of in-memory databases and advanced analytics workloads, it can speed up data movement and improve CPU core utilization by reducing dependency on CPU cores. Intel IAA is well suited to in-memory databases, open-source databases, and data stores such as RocksDB, Redis, Cassandra, and MySQL. Intel reports up to 3x higher RocksDB performance for the Xeon Platinum 8490H with Intel IAA compared with the previous generation. |

| Intel® Analytics Engines | Intel® Data Streaming Accelerator (Intel® DSA) | Intel DSA is a new feature designed to optimize and speed up streaming data movement and transformation operations common in data-intensive applications. By offloading tasks such as data movement, data copying, and error checking, Intel DSA lets the CPU focus on business-critical database functions or other analytics workloads, reducing query latencies and increasing throughput. |

| Intel® Analytics Engines | Intel® QuickAssist Technology (Intel® QAT) | Intel QAT can accelerate database backups. SQL Server 2022 added QAT support as a new feature, and with it SQL Server customers can achieve up to 2.3x faster backup operations and a 6% reduction in backup storage capacity. |

| Intel® Storage Engines | Intel® Data Streaming Accelerator (Intel® DSA) | Intel DSA operates on the CPU, between DRAM, caches, and processor cores, and extends across I/O to attached memory and storage as well as networked resources. As Intel’s next-generation direct memory access (DMA) engine, it speeds transfers between volatile and persistent memory and supports virtualized memory and I/O. Intel reports up to 1.6x higher IOPS and up to 37% lower latency for large-packet sequential reads on the Xeon Platinum 8490H with Intel DSA compared with the previous generation. |

| Intel® Storage Engines | Intel® QuickAssist Technology (Intel® QAT) | Intel QAT increases the performance of storage workloads and applications by accelerating cryptography and data compression/decompression. Intel reports that the Xeon Platinum 8490H with Intel QAT uses up to 95% fewer cores and achieves 2x higher level-1 compression throughput compared with the previous generation. |

| Intel® Storage Engines | Intel® Volume Management Device (Intel® VMD) | Intel VMD is a legacy feature, carried over from previous generations, that enables direct control and management of NVMe SSDs from the PCIe bus without additional hardware adapters. It allows a smoother, lower-cost transition to fast NVMe storage while limiting downtime of critical infrastructure. With features such as bootable RAID, robust surprise hot-plug, and status LED blinking, Intel VMD increases serviceability and helps you deploy next-generation storage with confidence. |
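On Linux, one way to check which of these engines a system exposes is to inspect the CPU flags in `/proc/cpuinfo` and the devices registered by the kernel's `idxd` driver, which manages DSA and IAA. The sketch below assumes the kernel flag names `amx_tile`, `amx_bf16`, `amx_int8`, `avx512f` and `sgx`, and the sysfs path `/sys/bus/dsa/devices` with `dsaN`/`iaxN` device names; verify these against your kernel version. It covers only part of the table (QAT, DLB, TDX and VMD are not detected), and a missing flag can also mean the feature is disabled in firmware or unsupported by the running kernel.

```python
import os

# Assumed Linux /proc/cpuinfo flag names for some of the engines above.
# Flags appear only if both the CPU and the running kernel support the feature.
FEATURE_FLAGS = {
    "AMX (tiles)": "amx_tile",
    "AMX BF16": "amx_bf16",
    "AMX INT8": "amx_int8",
    "AVX-512 Foundation": "avx512f",
    "SGX": "sgx",
}


def cpu_flags(cpuinfo_text: str) -> set:
    """Collect the flag tokens from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def report_features(flags: set) -> dict:
    """Map each feature name to whether its flag is present."""
    return {name: flag in flags for name, flag in FEATURE_FLAGS.items()}


def count_idxd_devices(sysfs_dir: str = "/sys/bus/dsa/devices") -> dict:
    """Count DSA ("dsaN") and IAA ("iaxN") devices under the assumed idxd sysfs path.
    Work queues ("wqX.Y") and engines are skipped; returns zeros if the path is absent."""
    counts = {"dsa": 0, "iaa": 0}
    if not os.path.isdir(sysfs_dir):
        return counts
    for name in os.listdir(sysfs_dir):
        if name.startswith("dsa") and name[3:].isdigit():
            counts["dsa"] += 1
        elif name.startswith("iax") and name[3:].isdigit():
            counts["iaa"] += 1
    return counts


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            flags = cpu_flags(f.read())
    except OSError:  # not Linux, or /proc unavailable
        flags = set()
    print(report_features(flags))
    print(count_idxd_devices())
```

The device counts can be compared with the "Default DSA Devices" and "Default IAA Devices" columns of the SKU table, keeping in mind that devices only appear once the driver has loaded.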

References

1. https://linustechtips.com/topic/1480633-what-is-better-amd-epyc-9004-series-or-4th-gen-intel-xeon-processors/

2. https://www.intel.com/content/www/us/en/support/articles/000094640/processors.html

3. https://www.linkedin.com/pulse/4th-gen-intel-xeon-scalable-xcc-mcc-hbm-variants-secret

4. https://www.intel.com/content/www/us/en/support/articles/000094640/processors.html

5. https://www.colfax-intl.com/downloads/4th-gen-xeon-acclerators-eguide-with-4th-gen-benchmarks.pdf