RDMA: Difference between revisions


Latest revision as of 09:52, 3 January 2024

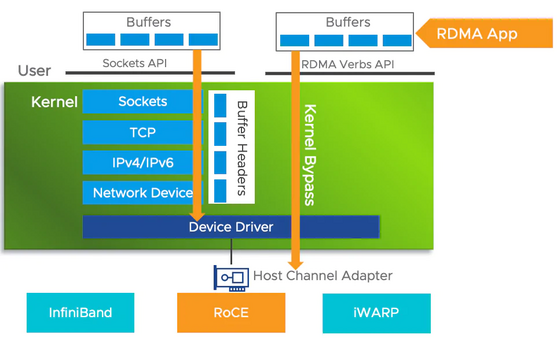

RDMA (Remote Direct Memory Access) is a network communication technology that was first applied in high-performance computing and has gradually become popular in data centers.

RDMA allows user programs to bypass the operating system kernel and interact directly with the network card, without involving the CPU in each data transfer, thereby providing high bandwidth and very low latency.[1]

Types of RDMA

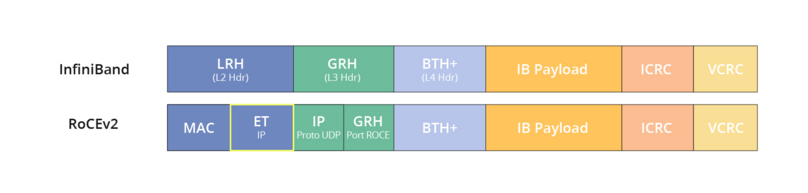

- InfiniBand is a network protocol designed specifically for RDMA, and it ensures reliable data transmission at the hardware level. Although the InfiniBand specifications were officially published in 2000, InfiniBand Architecture (IBA) only became widely used on cluster supercomputers after 2005. The biggest reason for this slow adoption is that InfiniBand requires its own dedicated hardware from L2 to L4, which makes it very expensive for enterprises to deploy.

- RoCE (RDMA over Converged Ethernet) is a protocol for implementing RDMA over an Ethernet network, which makes it a cheaper RDMA option. It comes in two versions:

  - RoCEv1 is an Ethernet link layer protocol, so it cannot run over a routed network: it only allows two hosts in the same Ethernet broadcast domain (VLAN) to communicate. It expects a lossless fabric, so priority flow control (PFC) should be enabled. It uses Ethertype 0x8915, and its frames are subject to the usual Ethernet length limits: 1500 bytes for a standard Ethernet frame and 9000 bytes for a jumbo frame.

  - RoCEv2 runs over layer 3. By changing the packet encapsulation to include IP and UDP headers, RoCEv2 can be used across both L2 and L3 networks. This enables layer 3 routing, which brings RDMA to networks with multiple subnets and greatly improves scalability, so RoCEv2 is also known as Routable RoCE (RRoCE). Because it is a routed solution, consider using explicit congestion notification (ECN) with RoCEv2, as ECN bits are communicated end-to-end across a routed network. RoCEv2 also makes IP multicast possible.

- iWARP is a protocol for implementing RDMA across Internet Protocol (IP) networks. Its advantage is that it runs on today's standard TCP/IP networks: the only purchase needed is a network card that supports iWARP, which makes it especially suitable for enterprises with a lower budget. Its disadvantage is that its performance is slightly worse than RoCE's.
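The encapsulation difference between the two RoCE versions can be illustrated with a small sketch that classifies a raw Ethernet frame. It is only an illustration under stated assumptions: the frame is untagged (no VLAN header) and carries IPv4, the function and helper names are hypothetical, and the UDP destination port 4791 is the IANA-assigned RoCEv2 port, which is not stated in the text above.

```python
import struct

ROCE_V1_ETHERTYPE = 0x8915   # Ethertype quoted in the text above
IPV4_ETHERTYPE = 0x0800
UDP_PROTOCOL = 17
ROCE_V2_UDP_PORT = 4791      # assumption: IANA-assigned RoCEv2 port, not from the text

ETH_HEADER_LEN = 14          # destination MAC + source MAC + Ethertype


def classify_frame(frame: bytes) -> str:
    """Return 'RoCEv1', 'RoCEv2', or 'other' for an untagged Ethernet frame."""
    if len(frame) < ETH_HEADER_LEN:
        return "other"
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype == ROCE_V1_ETHERTYPE:
        # RoCEv1 sits directly on the link layer, so it cannot be routed.
        return "RoCEv1"
    if ethertype != IPV4_ETHERTYPE or len(frame) < ETH_HEADER_LEN + 20:
        return "other"
    ihl = (frame[ETH_HEADER_LEN] & 0x0F) * 4      # IPv4 header length in bytes
    protocol = frame[ETH_HEADER_LEN + 9]
    udp_start = ETH_HEADER_LEN + ihl
    if ihl < 20 or protocol != UDP_PROTOCOL or len(frame) < udp_start + 8:
        return "other"
    (dst_port,) = struct.unpack("!H", frame[udp_start + 2:udp_start + 4])
    # RoCEv2 is carried inside IP/UDP, which is what makes it routable.
    return "RoCEv2" if dst_port == ROCE_V2_UDP_PORT else "other"


def build_test_frame(ethertype: int, payload: bytes = b"") -> bytes:
    """Hypothetical helper: zeroed MAC addresses + Ethertype + payload."""
    return bytes(12) + struct.pack("!H", ethertype) + payload


def build_ipv4_udp(dst_port: int) -> bytes:
    """Hypothetical helper: minimal IPv4 header (protocol = UDP) + UDP header."""
    ip_header = bytes([0x45, 0]) + bytes(7) + bytes([UDP_PROTOCOL]) + bytes(10)
    udp_header = struct.pack("!HHHH", 49152, dst_port, 8, 0)
    return ip_header + udp_header


if __name__ == "__main__":
    print(classify_frame(build_test_frame(ROCE_V1_ETHERTYPE)))
    print(classify_frame(build_test_frame(IPV4_ETHERTYPE, build_ipv4_udp(ROCE_V2_UDP_PORT))))
```

A real capture tool would also need to handle VLAN tags and IPv6, which this sketch deliberately leaves out.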

The Difference Between RDMA and TCP/IP[2][3]

| Criterion | RoCE | InfiniBand | iWARP | TCP/IP |
|---|---|---|---|---|
| High scalability | Good | Excellent | Worse than InfiniBand and RoCE | Poor |
| High performance | Equivalent to InfiniBand | Excellent | Slightly worse than InfiniBand (affected by TCP) | Poor |
| High stability | Good | Excellent | Poor | Excellent |
| Easy management | Hard | Hard | Harder than RoCE | Easy |
| Cost | Low | High | Medium | Low |
| Network device | Network device | IB switch | Network device | Network device |

RDMA service

RDMA service in Linux allows applications on different hosts to exchange data directly over the network with minimal involvement of the operating system. It provides high-speed, low-latency data transfer between applications using a low-overhead protocol that works across a variety of network configurations. Because it reduces the overhead associated with networking and offloads tasks such as packet processing and network I/O to the network card, it improves performance and scalability and frees up the system’s resources for other tasks.

To run RDMA, you must use pinned memory, i.e. memory that the kernel is not allowed to swap out. By default, processes that do not run as root may lock only a small amount of memory (64 KB), so the amount of memory that can be locked must be increased in order for RDMA to function properly.[4]
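As a rough way to see whether the locked-memory limit is still at its low default, the current process's `RLIMIT_MEMLOCK` values can be read with Python's standard `resource` module. This is a minimal sketch: the function names are hypothetical, and the 64 KB threshold is simply the figure quoted above, not a value every system uses.

```python
import resource

# Threshold taken from the 64 KB figure quoted in the text above (an assumption
# about what counts as "too low", not a universal default).
LOW_LIMIT_BYTES = 64 * 1024


def describe_limit(value: int) -> str:
    """Format an rlimit value in KB, or 'unlimited' for RLIM_INFINITY."""
    if value == resource.RLIM_INFINITY:
        return "unlimited"
    return f"{value // 1024} KB"


def memlock_status() -> dict:
    """Report the soft and hard locked-memory limits of this process."""
    soft, hard = resource.getrlimit(resource.RLIMIT_MEMLOCK)
    likely_too_low = soft != resource.RLIM_INFINITY and soft <= LOW_LIMIT_BYTES
    return {
        "soft": describe_limit(soft),
        "hard": describe_limit(hard),
        "likely_too_low": likely_too_low,
    }


if __name__ == "__main__":
    status = memlock_status()
    print(f"soft limit: {status['soft']}, hard limit: {status['hard']}")
    if status["likely_too_low"]:
        print("The locked-memory limit looks too low for RDMA; consider raising it.")
```

How the limit is raised (for example through the shell's `ulimit -l` or the system's limits configuration) depends on the distribution and is not covered here.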

RDMA supported NIC

To check whether your NIC supports RDMA, run the lspci command in a Linux terminal. It lists all the PCI devices connected to your system, so you can identify the NIC model and then look up its specifications online to see whether it supports RDMA.
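Beyond reading the lspci output, one complementary check is to see whether the kernel has registered any RDMA devices. The sketch below assumes a Linux system where the RDMA stack exposes devices under `/sys/class/infiniband` (the directory name is used for RoCE and iWARP devices as well as InfiniBand); the function name is hypothetical, and an empty result may only mean that the driver is not loaded.

```python
from pathlib import Path


def list_rdma_devices(sysfs_root: str = "/sys/class/infiniband") -> list:
    """Return the names of RDMA devices registered with the kernel, if any."""
    root = Path(sysfs_root)
    if not root.is_dir():
        # No RDMA-capable device is registered, or the driver is not loaded.
        return []
    return sorted(entry.name for entry in root.iterdir())


if __name__ == "__main__":
    devices = list_rdma_devices()
    if devices:
        print("RDMA devices found:", ", ".join(devices))
    else:
        print("No RDMA devices are registered on this system.")
```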

GPUDirect RDMA

When training large-scale models in a multi-node cluster, the cost of inter-node communication is significant.

The combination of InfiniBand and GPUs enables a feature called GPUDirect RDMA, which allows GPUs on different nodes to communicate directly through the InfiniBand network interface cards, without going through the host CPU and system memory.

References

1. https://www.naddod.com/blog/rdma-high-speed-network-for-large-model-training

2. https://community.fs.com/article/roce-vs-infiniband-vs-tcp-ip.html

3. https://community.fs.com/article/roce-rdma-over-converged-ethernet.html

4. https://www.systranbox.com/checking-for-rdma-capability-on-linux-how-to-determine-if-your-nic-is-rdma-ready/