NUMA


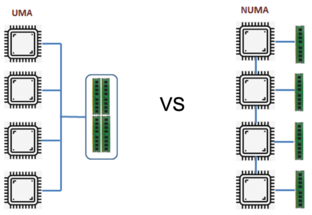


Revision as of 09:55, 10 April 2023

Non-Uniform Memory Access (NUMA) is a shared-memory architecture used in contemporary multiprocessing systems. NUMA allows every CPU to access the entire memory directly, although the cost of an access depends on where the memory is located relative to the CPU. This makes NUMA systems attractive for server-side applications such as data mining and decision support systems. Writing applications for gaming and high-performance software also becomes easier with this architecture, since all memory is directly addressable.

NUMA connects multiple central processing units (CPUs) to all of the memory available on the computer. The system is divided into NUMA nodes, each of which groups a set of CPUs with a portion of the memory. The individual nodes are connected over a scalable interconnect, so that a CPU can also access memory associated with other NUMA nodes.
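On Linux, the grouping of CPUs into nodes can be inspected through sysfs. The following is a minimal sketch, assuming the kernel exposes one `nodeN` directory per NUMA node under `/sys/devices/system/node`, each containing a `cpulist` file. The name `list_numa_nodes` is a hypothetical helper, and its optional argument exists only so that the function can be pointed at a different directory.

```shell
# list_numa_nodes [BASE]: print each NUMA node with the CPUs that belong to it.
# BASE defaults to the sysfs location used by the Linux kernel.
list_numa_nodes() {
  base=${1:-/sys/devices/system/node}
  for dir in "$base"/node[0-9]*; do
    [ -d "$dir" ] || continue            # skip if the glob matched nothing
    # ${dir##*/} strips the path and leaves the directory name, e.g. "node0"
    printf '%s: cpus %s\n' "${dir##*/}" "$(cat "$dir/cpulist")"
  done
}
```

Calling `list_numa_nodes` without arguments on a NUMA machine should print one line per node. On a machine with a single node, only `node0` is listed.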

Local memory is memory that belongs to the same NUMA node as the CPU accessing it. Foreign (or remote) memory is memory that a CPU accesses from another NUMA node. The NUMA ratio is the cost of accessing foreign memory divided by the cost of accessing local memory. The greater the ratio, the more expensive a remote access is compared with a local one, and thus the longer it takes.
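As a rough illustration, the ratio can be estimated from the node distance table that `numactl --hardware` prints (an example of that output appears in the tools section of this page). The sketch below assumes that the reported distances approximate relative access costs and that nodes are numbered consecutively from 0. The name `numa_ratio` is a hypothetical helper, not part of numactl.

```shell
# numa_ratio: read `numactl --hardware` style text on stdin and print the
# largest remote distance divided by the local distance.
numa_ratio() {
  awk '
    /^node distances:/ { in_table = 1; next }   # the table follows this line
    in_table && /^ *node / { next }             # skip the column header row
    in_table && /^ *[0-9]+:/ {
      row = $1 + 0                              # "0:" becomes the number 0
      for (i = 2; i <= NF; i++) {
        col = i - 2                             # first value column is node 0
        if (col == row) loc = $i                # diagonal entry: local access
        else if ($i > rem) rem = $i             # keep the largest remote value
      }
    }
    END { if (loc > 0) printf "%.1f\n", rem / loc }
  '
}
```

Typical use is `numactl --hardware | numa_ratio`. For a two-node table with a local distance of 10 and a remote distance of 32, the function prints 3.2.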

Local memory access is a major advantage, as it combines low latency with high bandwidth. In contrast, accessing memory that belongs to another CPU has higher latency and lower bandwidth. Overall, the NUMA design allows more efficient use of the 64-bit addressing scheme, resulting in faster movement of data, less replication of data, and easier programming.

NUMA Tools in Linux

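The Linux kernel has supported NUMA since version 2.5. Two software packages, numactl[1] and numad[2], can be used to optimize how processes are placed on NUMA nodes. The command numactl --hardware shows the nodes of a system together with their CPUs, memory sizes, and node distances: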

$ numactl --hardware

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95

node 0 size: 64328 MB

node 0 free: 26908 MB

node 1 cpus: 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127

node 1 size: 64493 MB

node 1 free: 30551 MB

node distances:

node 0 1

0: 10 32

1: 32 10
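Once the node layout is known, a process can be restricted to one node so that its memory accesses stay local. The following is a minimal sketch using the numactl options `--cpunodebind` and `--membind`. The wrapper name `run_on_node` and the `DRY_RUN` variable are hypothetical and exist only for illustration.

```shell
# run_on_node NODE CMD [ARGS...]: run CMD with its CPUs and memory
# restricted to NUMA node NODE. With DRY_RUN set, only print the command.
run_on_node() {
  node=$1; shift
  # Prepend the numactl invocation to the command line that was passed in.
  set -- numactl --cpunodebind="$node" --membind="$node" "$@"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

For example, `DRY_RUN=1 run_on_node 0 ./app` prints `numactl --cpunodebind=0 --membind=0 ./app` without starting anything (`./app` is a placeholder program name). Binding memory strictly to one node means allocations fail once that node is full, so this is best suited to workloads that fit into the memory of a single node.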

- numatop[3] is a tool developed by Intel for monitoring runtime memory locality and analyzing processes on NUMA systems. It can identify potential NUMA-related performance bottlenecks and thereby help to rebalance memory and CPU allocations to get the most out of a NUMA system.

Note: numatop does not support AMD CPUs. On Debian-based systems it can be installed with apt-get:

$ sudo apt-get update

$ sudo apt-get install numatop

Reference

[[Category:teminology]]