
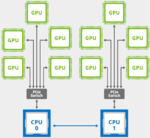

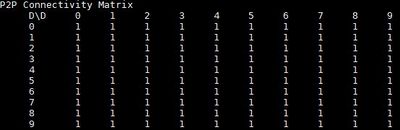


Latest revision as of 18:21, 5 March 2019

Dual vs Single PCIe Root Configuration Options

In brief, single root versus dual root is the technical term for a difference in PCIe architecture: whether all GPUs hang off one CPU's PCIe root complex or are split across two. One advantage of the single-root architecture is that it can use a lower QPI/UPI speed and a lower-end CPU SKU. PCIe switches do add some latency; however, as the benchmarks below show, there are advantages to using more PCIe switches rather than traversing QPI/UPI. Moving to a single-root PCIe complex decouples GPU-to-GPU latency from socket-to-socket interconnect performance, so the highest-speed interconnect between CPUs is not needed. On AMD EPYC systems, GPUs connected to different CPUs communicate over the Infinity Fabric interconnect.

Single-Root Configuration

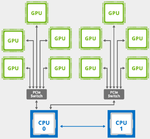

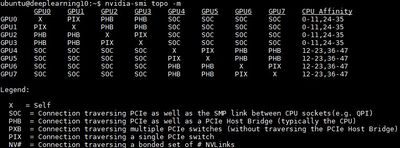

In a typical dual-root setup, half of the GPUs are attached to one CPU and the other half to the second CPU. This matters because, depending on where the Infiniband card is placed, its traffic may have to cross one or two PCIe switches and a socket-to-socket QPI link. To mitigate this impact, we have seen deep learning cluster deployments with two Infiniband cards, one attached to each CPU.

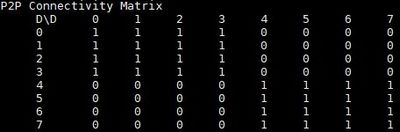

The picture illustrates this using the SLI covers: the red and black SLI covers mark GPUs on different CPUs. In the topology above, traffic between the two groups has to traverse QPI, so that communication is marked as SOC. For peer-to-peer connectivity, this means that GPUs 0-3 can do P2P among themselves, as can GPUs 4-7, but not across the two groups.
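The labelling described above can be sketched in a few lines of Python. This is a simplified model, not how the driver actually computes the topology matrix: the `Device` class, its fields, and the switch names are hypothetical, and the labels loosely follow the legend printed by older `nvidia-smi topo -m` releases (X, PIX, PXB, PHB, SOC).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Hypothetical description of where a PCIe device sits."""
    name: str
    cpu: int               # socket whose PCIe root the device hangs off
    switches: tuple = ()   # PCIe switch IDs, ordered from the CPU down to the device


def link_label(a: Device, b: Device) -> str:
    """Classify the path between two devices (simplified model)."""
    if a == b:
        return "X"      # same device
    if a.cpu != b.cpu:
        return "SOC"    # must cross the socket-to-socket link (QPI/UPI)
    shared = [s for s in a.switches if s in b.switches]
    if not shared:
        return "PHB"    # same CPU, but traffic goes through the host bridge
    if a.switches[-1] == b.switches[-1]:
        return "PIX"    # both devices sit under the same switch
    return "PXB"        # shared upstream switch, different downstream switches


# Assumed dual-root layout: two GPUs and an Infiniband card on CPU 0, one GPU on CPU 1.
gpu0 = Device("GPU0", cpu=0, switches=("sw0",))
gpu1 = Device("GPU1", cpu=0, switches=("sw0",))
gpu4 = Device("GPU4", cpu=1, switches=("sw2",))
ib0 = Device("IB0", cpu=0, switches=("sw1",))

for other in (gpu1, gpu4, ib0):
    print(f"{gpu0.name} <-> {other.name}: {link_label(gpu0, other)}")
```

In a single-root layout every device would share `cpu=0`, so the `"SOC"` branch is never taken, which matches the absence of SOC entries in a single-root topology matrix.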

Dual-Root Configuration

Single root means that every GPU is connected to the same CPU, generally through PCIe switches. As you can see from the topology matrix, there are no “SOC” entries, and you will notice there are no red SLI connectors on the second CPU. For peer-to-peer connectivity, this means we no longer have a matrix of 1’s and 0’s: every GPU pair can do P2P. This is a popular topology for deep learning servers, and we have seen several big data/AI companies using both versions of the GPU server. We have also seen a large hyper-scale AI company use the single-root version of this server with GTX 1080 Ti’s, Titan Xp’s as well as P100’s.
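To make the difference between the two P2P matrices concrete, here is a minimal sketch that derives a connectivity matrix from a GPU-to-CPU assignment. It assumes, as a simplification of the systems described here, that two GPUs can do P2P exactly when they sit under the same CPU; the function name and the assignment lists are illustrative, and the diagonal is set to 1 by convention.

```python
def p2p_matrix(gpu_to_cpu):
    """Return an n x n matrix with 1 where two GPUs share a CPU/PCIe root, else 0."""
    n = len(gpu_to_cpu)
    return [
        [1 if gpu_to_cpu[i] == gpu_to_cpu[j] else 0 for j in range(n)]
        for i in range(n)
    ]


def show(title, matrix):
    """Print the matrix one row per GPU."""
    print(title)
    for gpu, row in enumerate(matrix):
        print(f"  GPU{gpu}: " + " ".join(str(v) for v in row))


# Assumed layouts: 8 GPUs split 4/4 across two CPUs, and 10 GPUs on one CPU.
dual_root = [0, 0, 0, 0, 1, 1, 1, 1]
single_root = [0] * 10

show("Dual root (8 GPUs)", p2p_matrix(dual_root))
show("Single root (10 GPUs)", p2p_matrix(single_root))
```

The dual-root case produces two 4x4 blocks of 1's with 0's elsewhere, while the single-root case is all 1's.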

Single Root v. Dual Root Bandwidth

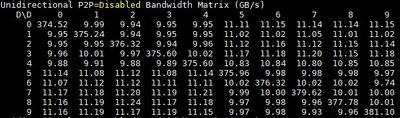

NVIDIA specifically says that the p2pBandwidthLatencyTool is not for performance analysis. With that said, we wanted to get some data out there simply to present a rough idea of what is happening.

Unidirectional Bandwidth

Aside from using more GPUs, you will notice that by not having to traverse the QPI bus, there is around a 2x GPU-to-GPU speed improvement. (Please ignore GPU7 in these charts, as something is off with its results.)
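One way to quantify a comparison like this from a bandwidth matrix is to average the GPU pairs that share a CPU separately from the pairs that cross the socket link. The sketch below is illustrative only: the function name is hypothetical, and the 4-GPU matrix contains placeholder values, not measurements from these systems.

```python
from statistics import mean


def split_bandwidth(matrix, gpu_to_cpu):
    """Average off-diagonal bandwidth for same-root and cross-root GPU pairs.

    The diagonal is skipped because it is a copy within a single GPU,
    not a GPU-to-GPU transfer.
    """
    same, cross = [], []
    for i, row in enumerate(matrix):
        for j, value in enumerate(row):
            if i == j:
                continue
            if gpu_to_cpu[i] == gpu_to_cpu[j]:
                same.append(value)
            else:
                cross.append(value)
    return (mean(same) if same else None, mean(cross) if cross else None)


# Placeholder GB/s values for a 4-GPU, two-CPU layout (NOT real measurements).
example = [
    [100.0, 10.0, 5.0, 5.0],
    [10.0, 100.0, 5.0, 5.0],
    [5.0, 5.0, 100.0, 10.0],
    [5.0, 5.0, 10.0, 100.0],
]
same_avg, cross_avg = split_bandwidth(example, [0, 0, 1, 1])
print(f"same root: {same_avg} GB/s, cross root: {cross_avg} GB/s")
```

To leave out a misbehaving device such as GPU7, one could drop its row and column from the matrix (and its entry from the assignment list) before calling the function.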

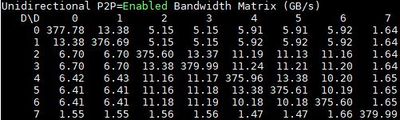

Turning to P2P enabled, here is the 8x GPU dual-root system:

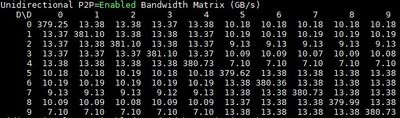

Now let’s take a look at the 10x GPU single-root version:

You can see the impact of tiering multiple PCIe switches, but the bandwidth gains cannot be ignored.

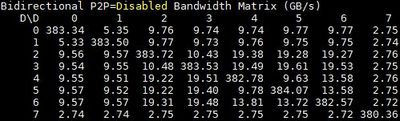

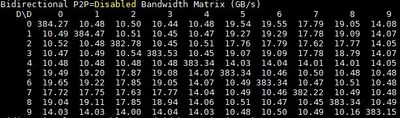

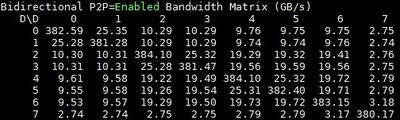

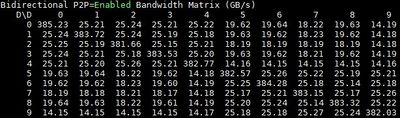

Bi-directional Bandwidth

What happens when we move to bi-directional bandwidth? Here is the 8x GPU dual root system (again see the note above about GPU7):

Here is the 10 GPU system for comparison:

When we turn on P2P we see some gains on the dual root 8x GPU system:

We also see more substantial gains on the single root 10x GPU system:

Single Root v. Dual Root Latency

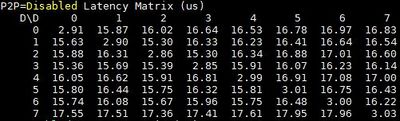

When it comes to latency, here are the P2P disabled 8x GPU results:

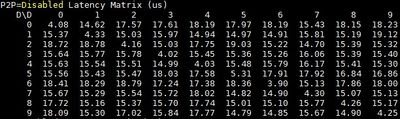

Here are the single-root 10x GPU results. Note that even with more GPUs on the PCIe switches, the performance is still good.

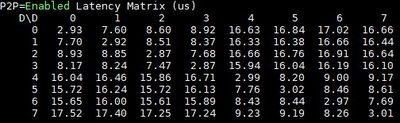

P2P has a significant latency impact on the dual-root system for GPUs on the same CPU/PCIe root.

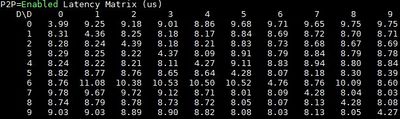

When you flip to P2P on the 10x GPU system, you will notice a fairly dramatic latency drop compared with the above. This is one of the key drivers towards single-root PCIe systems.

- Reference : https://www.servethehome.com/single-root-or-dual-root-for-deep-learning-gpu-to-gpu-systems/