Eliminating the data link bottleneck

Researchers are developing better interconnects for multi-GPU systems

In brief: Researchers at the University of Illinois at Urbana-Champaign and the University of California, Los Angeles, are developing a wafer-scale computer that aims to be faster and more energy efficient than contemporary counterparts.

As IEEE Spectrum explains, supercomputers typically spread an application load over hundreds of GPUs that sit on separate printed circuit boards and communicate with each other via long-haul data links. These data links are a major bottleneck, as they are much slower than the interconnects used within the chips themselves.

Furthermore, the “mismatch between the mechanical properties of chips and of printed circuit boards” means that processors must be contained within packages with a limited number of inputs and outputs.

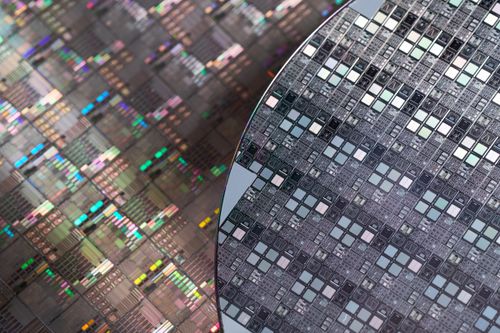

Ideally, you’d want connections between GPU modules that are as fast and energy efficient as the interconnects used on the chips. One way to do that would be to build all of the GPUs on the same silicon wafer and add interconnects between them. At scale (say, with 40 GPUs), that’d be a recipe for disaster: with a project of that size, you’re almost guaranteed to have some sort of manufacturing flaw.
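To see why a monolithic 40-GPU wafer is so risky, here is a rough back-of-the-envelope sketch. It assumes each GPU on the wafer comes out defect-free independently with the same probability, which is a simplification. The 90 percent per-GPU figure and the `wafer_yield` helper are illustrative assumptions, not numbers or code from the researchers.

```python
def wafer_yield(per_die_yield: float, n_dies: int) -> float:
    """Chance that every one of n_dies GPUs on a single wafer is defect-free.

    Assumes defects hit each GPU independently with the same probability,
    a deliberate simplification for illustration.
    """
    if not 0.0 <= per_die_yield <= 1.0:
        raise ValueError("per_die_yield must be between 0 and 1")
    if n_dies < 0:
        raise ValueError("n_dies must be non-negative")
    # Independent events: multiply the per-GPU probability n_dies times.
    return per_die_yield ** n_dies


if __name__ == "__main__":
    # 0.9 is a hypothetical per-GPU yield, chosen only for illustration.
    for n in (1, 10, 40):
        print(f"{n:>2} GPUs: {wafer_yield(0.9, n):.1%} chance of a flawless wafer")
```

Under that assumed 90 percent figure, the chance of all 40 GPUs coming out clean is only about 1.5 percent, which is why starting from chips that have already passed testing is attractive.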

Another idea is to take standard GPU chips that have already passed quality testing and create a new technology to better connect them. That’s precisely what the researchers are doing here through a new tech they’re calling silicon interconnect fabric (SiIF).

With such tight integration, from the perspective of the programmer, the system would look like one giant GPU rather than 40 individual GPUs.

Simulations of this multi-GPU monster “sped calculations nearly 19-fold and cut the combination of energy consumption and signal delay more than 140-fold.”

Illinois computer engineering associate professor Rakesh Kumar and his colleagues have already started building a wafer-scale prototype and will present their findings at the IEEE International Symposium on High-Performance Computer Architecture in February.