TensorFlow for AMD ROCm GPU

In brief: ROCm (the Radeon Open Ecosystem) is an open-source software foundation for GPU computing on Linux. On Aug 27, 2018, the release of TensorFlow v1.8 for ROCm-enabled GPUs, including the Radeon Instinct™ MI25, was announced. This build leverages MIOpen, a library of highly optimized GPU routines for deep learning, and is a major milestone in AMD's ongoing work to accelerate deep learning.

ROCm - Radeon Open Ecosystem

For a long time, AMD avoided competing head-on with Nvidia. That is expected to change with AMD's 2019 products. Specifically, Computex 2019 should be the place and time where we get to know AMD GPU products that compete with Nvidia RTX. ROCm is AMD's effort to enter the GPU computing domain. AMD has started to provide a pre-built whl package, which allows a simple install akin to installing generic TensorFlow for Linux. AMD has also published installation instructions and a pre-built Docker image, and is upstreaming all the ROCm-specific enhancements to the TensorFlow master repository.
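Once the pre-built package is installed, a quick sanity check is to ask TensorFlow which GPUs it can see. The sketch below is a minimal example under stated assumptions, not AMD's official verification step: it assumes the ROCm build installs under the usual `tensorflow` import name (the pip package name `tensorflow-rocm` is an assumption here, so check AMD's installation instructions), and the helper `visible_gpus` is hypothetical. It uses `tf.config.list_physical_devices` on newer TensorFlow releases and falls back to `device_lib` on older 1.x builds such as v1.8.

```python
import importlib.util


def visible_gpus():
    """Hypothetical helper: names of GPUs TensorFlow can see, or [] if TF is absent."""
    # Return an empty list instead of crashing when TensorFlow is not installed.
    if importlib.util.find_spec("tensorflow") is None:
        return []
    import tensorflow as tf

    # tf.config.list_physical_devices exists in TensorFlow 2.1 and later.
    if hasattr(tf, "config") and hasattr(tf.config, "list_physical_devices"):
        return [d.name for d in tf.config.list_physical_devices("GPU")]

    # Older 1.x builds (e.g. the v1.8 release discussed here) expose device_lib.
    from tensorflow.python.client import device_lib
    return [d.name for d in device_lib.list_local_devices() if d.device_type == "GPU"]


if __name__ == "__main__":
    gpus = visible_gpus()
    print(f"TensorFlow sees {len(gpus)} GPU(s): {gpus}")
```

An empty list on a machine with a ROCm-supported card usually means the CPU-only TensorFlow package was installed instead of the ROCm build, or that the ROCm kernel driver is not loaded.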

AMD's open-source efforts have also been highlighted by its selection as one of Google Stadia's building blocks. Google has chosen Linux as the base for Stadia, and it uses the open-source Vulkan API for 3D graphics and compute. Other critical open-source components used for Stadia include LLVM, DirectX Shader Compiler, GAPID, Radeon GPU Profiler, and RenderDoc. Google has also mentioned that a big reason for choosing AMD as its GPU partner is the availability of open-source drivers and tools. Other published Stadia hardware specs include 16 GB of memory and 9.5 MB of L2 + L3 cache.

Linus Tech Tips reviewed it, so yes, I would say it is competing with NVIDIA, especially compared with the 1080 Ti (it outperforms the 2080 by about 2 percent).

ROCm Platform and Features

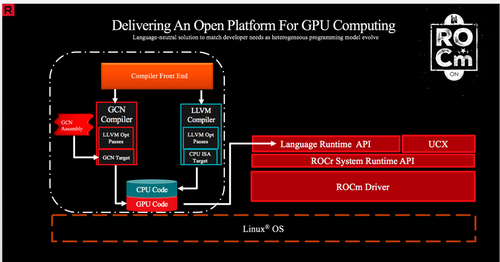

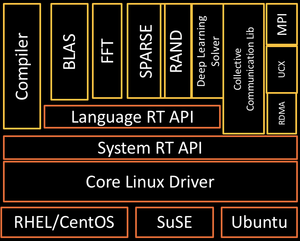

ROCm is the first open-source HPC/Hyperscale-class platform for GPU computing that is also programming-language independent. The ROCr System Runtime is language independent and makes heavy use of the Heterogeneous System Architecture (HSA) Runtime API. This approach provides a rich foundation for executing programming languages such as HCC C++ and HIP, the Khronos Group's OpenCL, and Continuum's Anaconda Python.

ROCm features

- Multi-GPU coarse-grain shared virtual memory

- Process concurrency and preemption

- Large memory allocations

- HSA signals and atomics

- User-mode queues and DMA

- Standardized loader and code-object format

- Dynamic and offline-compilation support

- Peer-to-peer multi-GPU operation with RDMA support

- Profiler trace and event-collection API

- Systems-management API and tools

More detailed technical documentation about ROCm is available at https://rocm-documentation.readthedocs.io/en/latest/index.html

ROCm opportunities

Nvidia has GeForce Now, with "big" data center partners like SoftBank and LG, so Google, Microsoft, and AWS had better watch out. Based on usage, some customers are definitely demanding CPU+GPU interconnection and coherency. Nvidia's AI plans seem to be failing as Google makes its own Tensor Processing Units, and Tesla does the same. Even Amazon is developing its own ASIC optimized for its workloads. Nvidia's data center GPUs are way too expensive and not suited to these customers' specific needs. Although Nvidia's proprietary CUDA ecosystem is great when your products are dominant, it may become a minus once AMD GPUs are equal or better. And for customers at exascale, open source is always the best way to go. As the industry enters the AI era, accelerating growth looks basically guaranteed for AMD.