Ultra Accelerator Link (UALink)


AMD, Broadcom, Google, Intel, Meta, and Microsoft all develop their own AI accelerators (well, Broadcom designs them for Google), Cisco produces networking chips for AI, and HPE builds servers. These companies want to standardize as much of the infrastructure around their chips as possible, which is why they have teamed up to develop a new industry standard for high-speed, low-latency communication between scale-up AI accelerators. The standard is called the Ultra Accelerator Link (UALink).[1]

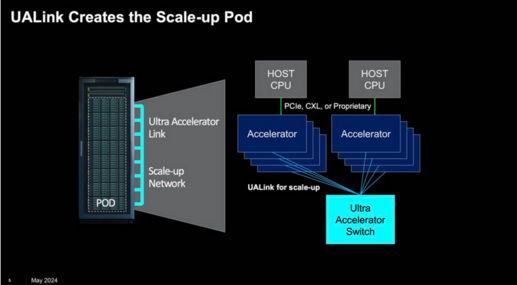

The UALink initiative is designed to create an open standard for AI accelerators to communicate more efficiently. The first UALink specification, version 1.0, will enable the connection of up to 1,024 accelerators within an AI computing pod in a reliable, scalable, low-latency network. This specification allows for direct data transfers between the memory attached to accelerators, such as AMD's Instinct GPUs or specialized processors like Intel's Gaudi, enhancing performance and efficiency in AI compute.

By standardizing an open interconnect for AI and HPC accelerators, UALink should make it easier for system OEMs, IT professionals, and system integrators to integrate and scale AI systems in datacenters. The standard aims to promote an open ecosystem and facilitate the development of large-scale AI and HPC solutions.[2]

As an open industry standard, UALink should reach the market faster because there will be less IP to haggle over, but even an optimistic 2026 release still seems far off, given that the industry needed massive AI GPU matrix engines yesterday.

UALink is expected to become an industry-wide open standard that eliminates dependence on Nvidia's proprietary NVLink. NVLink, Nvidia's GPU-to-GPU interconnect, can transfer data at 1.8 terabytes per second between GPUs as of June 2024. Nvidia also offers a rack-level NVLink Switch capable of supporting up to 576 fully connected GPUs in a non-blocking compute fabric. GPUs connected via NVLink are called “pods” to indicate that they form their own data and computational domain.
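To put the 1.8 TB/s figure in perspective, here is a back-of-envelope estimate of how long an idealized transfer would take at that rate. This is a sketch under stated assumptions, not a benchmark: it treats 1.8 TB/s as a decimal figure (1 TB = 10^12 bytes), assumes it is an aggregate bidirectional number so that roughly half is available in one direction, uses a hypothetical 140 GB payload (for example, 70 billion parameters at 2 bytes each), and ignores latency, protocol overhead, and contention. The function name `transfer_time_ms` is illustrative only.

```python
# Back-of-envelope transfer-time estimate at the NVLink bandwidth quoted above.
# Assumptions (not from the source): 1.8 TB/s is a decimal aggregate
# bidirectional figure, and the payload is a hypothetical 140 GB tensor set.

NVLINK_BW_BYTES_PER_S = 1.8e12  # 1.8 TB/s per GPU, as of June 2024


def transfer_time_ms(payload_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Idealized time (in milliseconds) to move payload_bytes at a sustained rate.

    Ignores latency, protocol overhead, and contention, so real transfers
    will be slower than this estimate.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_bytes / bandwidth_bytes_per_s * 1e3


payload = 70e9 * 2  # hypothetical: 70B parameters at 2 bytes each -> 140 GB
full = transfer_time_ms(payload, NVLINK_BW_BYTES_PER_S)         # whole headline rate
one_way = transfer_time_ms(payload, NVLINK_BW_BYTES_PER_S / 2)  # assumed per-direction rate
print(f"aggregate: {full:.1f} ms, one direction: {one_way:.1f} ms")
```

Under these assumptions the script prints roughly 77.8 ms at the full headline rate and about 155.6 ms if only half the bandwidth is usable in one direction, which shows why per-direction versus aggregate figures matter when comparing interconnects such as NVLink and UALink.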